Exploration vs Exploitation

A colony of bees knows a garden of roses nearby. This garden is their primary source of nectar and pollen. There might be another garden far from their hive which might contain a variety of flowers. Going to that garden demands a lot of time and energy. Should this colony of bees continue bringing nectar and pollen from nearby rose garden or should they risk searching for better options.

We have four agents. Each of them can take three actions in a single episode. These three actions lead to different rewards. The goal of agents is to gain maximum reward in 10000 episodes.

Four different agents come up with four different strategies. They are:

- Agent I : Greedy : Always exploit

- Agent II: Random: Always Explore

- Agent III: Epsilon greedy : Almost always greedy and sometimes randomly explore

- Agent IV: Decaying Epsilon Greedy: First Maximize Exploration and then exploitation

Three Bandits in code

import numpy as np

import random

def three_arm_bandit(action):

if action == 0:

value = np.round(random.gauss(10,5))

elif action == 1:

value = np.round(random.gauss(20,3))

elif action == 2:

value = np.round(random.gauss(90,1))

else:

print("This action is not allowed")

return valueAgent I : Greedy : Always exploit

action_values = np.zeros((3,1))

total_action_values = np.zeros((3,1))

action_count = np.zeros((3,1))

episodes = 10000

total_value = 0

for i in range(episodes):

#choose the action with highest value

bandit = np.argmax(action_values)

#print("The chosen bandit: ",bandit)

#call the function to get a new value for choosen action

value = three_arm_bandit(bandit)

#print("The value: ",value)

action_count[bandit] = action_count[bandit]+1

#print("Action count of the particular bandit: ",action_count[bandit])

total_action_values[bandit] = total_action_values[bandit]+value

action_values[bandit] = total_action_values[bandit]/action_count[bandit]

#print("Action value of the particular bandit: ",action_values[bandit])

total_value = total_value + value

#print("\nEnd of the episode")

print("Total value:",total_value)

avg_value = total_value/episodes

print("Average value:",avg_value)

print(action_count)

print(action_values)Average reward gained per episode: 9.9558

Agent II: Random: Always Explore

action_values = np.zeros((3,1))

total_action_values = np.zeros((3,1))

action_count = np.zeros((3,1))

episodes = 10000

total_value = 0

for i in range(episodes):

#randomly choose the action

bandit = random.randint(0, 2)

#call the function to get a new value for choosen action

value = three_arm_bandit(bandit)

action_count[bandit] = action_count[bandit]+1

total_action_values[bandit] = total_action_values[bandit]+value

action_values[bandit] = total_action_values[bandit]/action_count[bandit]

#print(action_values[bandit])

total_value = total_value + value

avg_value = total_value/episodes

print("Average reward gained per episode:",avg_value)Average reward gained per episode: 39.7575

Agent III: Epsilon greedy : Almost always greedy and sometimes randomly explore

def epsilon_greedy(epsilon):

action_values = np.zeros((3,1))

total_action_values = np.zeros((3,1))

action_count = np.zeros((3,1))

episodes = 10000

total_value = 0

epsilon = epsilon

for i in range(episodes):

#epsilon

random_value = random.randint(0,10)

if(random.randint(0,100)<epsilon):

#randomly choose the action

bandit = random.randint(0, 2)

else:

#choose the action with highest value

bandit = np.argmax(action_values)

#call the function to get a new value for choosen action

value = three_arm_bandit(bandit)

action_count[bandit] = action_count[bandit]+1

total_action_values[bandit] = total_action_values[bandit]+value

action_values[bandit] = total_action_values[bandit]/action_count[bandit]

#print(action_values[bandit])

total_value = total_value + value

avg_value = total_value/episodes

return avg_value

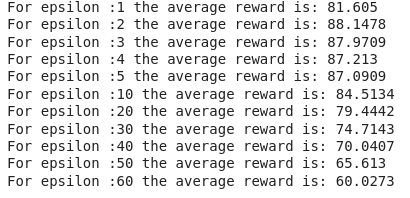

epsilons = [1,2,3,4,5,10,20,30,40,50,60]

for epsilon in epsilons:

avg_value = epsilon_greedy(epsilon)

print("For epsilon :"+str(epsilon)+" the average reward is: "+str(avg_value))

Agent IV: Decaying Epsilon Greedy: First Maximize Exploration and then exploitation

#At the beginning the agent explores 10 % of time

#The exploration decrease with 1% after each 1000 episodes

import math

action_values = np.zeros((3,1))

total_action_values = np.zeros((3,1))

action_count = np.zeros((3,1))

episodes = 10000

total_value = 0

for i in range(episodes):

#epsilon

random_value = random.randint(0,10)

epsilon = math.ceil(episodes/(i+(episodes/10)))

#print(epsilon)

if(random.randint(0,100)<epsilon):

#print("choosing randomly in episode :", i)

#randomly choose the action

bandit = random.randint(0, 2)

else:

#choose the action with highest value

bandit = np.argmax(action_values)

#call the function to get a new value for choosen action

value = three_arm_bandit(bandit)

action_count[bandit] = action_count[bandit]+1

total_action_values[bandit] = total_action_values[bandit]+value

action_values[bandit] = total_action_values[bandit]/action_count[bandit]

#print(action_values[bandit])

total_value = total_value + value

avg_value = total_value/episodes

print("Average reward gained per episode:",avg_valueAverage reward gained per episode: 88.3668

Here the agent III and IV has better average than others. The third action has higher average than others. The agent which figures out this and then exploit it, is the winner.

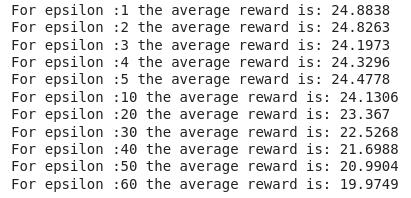

Let us change the action and rewards little bit and see what happens.

def three_arm_bandit(action):

if action == 0:

value = np.round(random.gauss(10,1))

elif action == 1:

value = np.round(random.gauss(25,1))

elif action == 2:

value = np.round(random.gauss(15,5))

else:

print("This action is not allowed")

return value

Performance of Agent I

Average reward gained per episode: 10.0047

Performance of Agent II

Average reward gained per episode: 16.7323Performance of Agent III

Performance of Agent IV

Average reward gained per episode: 24.7583

Here second action has the highest mean. Average reward of Agent III and IV are closer to this value while rewards gained by Agent I and Agent II are far from this value.