Stock Price Prediction with CNN-BGRU Model

In this post we write code to forecast the stock prices of companies listed on NEPSE (Nepal Stock Exchange) using the CNN-BGRU method described in this paper.

The user is asked to enter the stock symbol of the desired company and the future. Here, future means the forecast horizon in days. For example, future = 0 means the model will forecast tomorrow's stock price.

The application trains the model when the user presses the train button. It also shows a graph comparing the actual and predicted values, and saves the trained model.

The user can then get the forecast with the press of a button.


Create a virtual environment

mkdir Nepse_CNN_BGRU

cd Nepse_CNN_BGRU

python3 -m venv venv

source venv/bin/activate

Creating a git repository

gh repo create

#Then walk through

#For more instructions

Basic installation

#install streamlit

pip3 install streamlit

#library for web scraping

pip3 install requests_html bs4

#install scikit-learn

pip3 install -U scikit-learn

#install tensorflow

python3 -m pip install tensorflow

#install scipy

python3 -m pip install scipy

Create a few necessary files

touch main.py interpolate.py rnn_models.py scrapper.py process.py

scrapper.py

This module provides the function price_history, which takes a stock symbol and a start date as arguments and returns the closing prices of that stock from start_date up to today.
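To make the parsing step easier to follow, here is a minimal sketch of the same strip-the-tags-then-extract-numbers idea on a made-up snippet. The tag names and values in `sample` are hypothetical and are not the real response format of the service; `extract_numbers` is likewise a name chosen only for this illustration.

```python
import re

# Hypothetical markup, for illustration only (not the real service response).
sample = "<Close>1,234.50</Close><Date>2020-12-15</Date>"

def extract_numbers(text):
    """Drop commas and tags, then return every int/float token as a string."""
    text = text.replace(",", "")          # "1,234.50" -> "1234.50"
    text = re.sub(r"<.*?>", " ", text)    # replace each <tag> with a space
    return re.findall(r"\d+(?:\.\d+)?", text)

tokens = extract_numbers(sample)
print(tokens)
```

Because the hyphens in a date are not part of a number, a date comes back as three separate tokens. That is why price_history joins three consecutive tokens with "-" to rebuild each date.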

from bs4 import BeautifulSoup
from requests_html import HTMLSession
# using regex to format string
import re
import pandas as pd
from datetime import datetime
import numpy as np

def price_history(company_name, Start_date):
    # initialize an HTTP session
    session = HTMLSession()
    url = "https://www.nepalipaisa.com/Modules/CompanyProfile/webservices/CompanyService.asmx/GetCompanyPriceHistory"
    max_limit = 5000
    # Start_date = "2011-12-15"
    # End_date = "2020-12-15"
    End_date = datetime.today().strftime('%Y-%m-%d')
    data = {"StockSymbol": company_name, "FromDate": Start_date, "ToDate": End_date, "Offset": 1, "Limit": max_limit}
    res = session.post(url, data=data)
    result = res.text
    # removing comma
    result = result.replace(',', '')
    # removing <anything> between < and >
    a = re.sub(r"<.*?>", " ", result)
    # this will return float and int in string
    d = re.findall(r"(\d+(?:\.\d+)?)", a)
    # this token is used as the number of records to read
    print(d[-20])
    real_max = int(d[-20])
    # each record spans 20 tokens; the closing price is at offset 5
    close_price = []
    start = 5
    for i in range(real_max):
        close_price.append(float(d[start]))
        start = start + 20
    # the date is rebuilt from three consecutive tokens starting at offset 14
    dates = []
    start = 14
    for i in range(real_max):
        temp = d[start] + "-" + d[start+1] + "-" + d[start+2]
        dates.append(temp)
        start = start + 20
    # putting scraped closing price with date in dataframe
    lst_col = ["Date", "Close"]
    df = pd.DataFrame(columns=lst_col)
    df['Date'] = dates
    df['Close'] = close_price
    # putting oldest data at the start of dataframe
    df = df.iloc[::-1]
    # setting date as index
    df = df.set_index('Date')
    df = df.dropna()
    # replacing zero with previous data
    df = df['Close'].mask(df['Close'] == 0).ffill()
    df = df.to_frame()
    return df
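The last cleaning step treats a closing price of 0 as missing and carries the previous price forward. A plain-Python sketch of that logic, with made-up prices, might look like the following. The helper name `forward_fill_zeros` is an assumption for illustration and is not part of the project, which relies on the pandas `mask(...).ffill()` chain instead.

```python
def forward_fill_zeros(prices):
    """Replace each 0 with the most recent non-zero price before it.

    A zero at the very start has nothing to copy from, so it becomes None,
    much like pandas leaves a leading NaN in place when forward filling.
    """
    filled = []
    last_valid = None
    for price in prices:
        if price != 0:
            last_valid = price
        filled.append(last_valid)
    return filled

cleaned = forward_fill_zeros([0, 100.0, 0, 102.5, 0, 0, 105.0])
print(cleaned)
```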

process.py

This module takes the company's stock prices as a pandas DataFrame, together with the future and the lookback period, and returns NumPy arrays suitable for the TensorFlow model along with the mean and standard deviation of the data.
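As a rough illustration of the windowing involved, the sketch below builds the input windows and targets from a short made-up series in plain Python. The function name `make_windows` is hypothetical; the indexing mirrors what data_for_model does, but scaling and the reshape to a 3D array are left out.

```python
def make_windows(series, lookback, future):
    """Return (X, y): each X[i] holds `lookback` consecutive values and
    y[i] is the value `future` + 1 steps after the end of that window."""
    n_samples = len(series) - (lookback + future)
    X = [series[i:i + lookback] for i in range(n_samples)]
    y = [series[i + lookback + future] for i in range(n_samples)]
    return X, y

prices = [10, 11, 12, 13, 14, 15, 16]
X, y = make_windows(prices, lookback=3, future=1)
print(X)
print(y)
```

With future = 0 the target is simply the value right after each window, which corresponds to forecasting tomorrow's price.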

import numpy as np
import pandas as pd
from sklearn import preprocessing

def make_frame(input_frame, scaled_frame, rows, columns):
    # row j of input_frame holds the scaled series shifted by j steps
    for j in range(rows):
        count = 0
        for i in range(0 + j, columns + j):
            input_frame[j][count] = scaled_frame[i][0]
            count = count + 1
    return input_frame

def data_for_model(company_df, Look_back_period, future):
    company_df = company_df.ffill()
    numpy_array = company_df.values
    close_array = numpy_array
    entries_total = close_array.shape[0]
    mean_std_array = np.array([close_array.mean(), close_array.std()])
    mean_std_scaler = preprocessing.StandardScaler()
    close_scaled = mean_std_scaler.fit_transform(close_array)
    rows = Look_back_period
    columns = entries_total - (rows + future)
    company_input = np.zeros(shape=(rows, columns))
    company_input = make_frame(company_input, close_scaled, rows, columns)
    company_input = company_input.T
    company_output = np.zeros(shape=(columns, 1))
    for i in range(rows, (columns + rows)):
        company_output[i - rows][0] = close_scaled[i + future][0]
    # combining all arrays
    features = 1
    company_input_3d = np.zeros(shape=(columns, rows, features))
    company_input_3d[:, :, 0] = company_input
    return_list = []
    return_list.append(mean_std_array)
    return_list.append(company_input_3d)
    return_list.append(company_output)
    return return_list

rnn_models.py

This library contains various functions:

- Model_CNN_BGRU : The tensorflow model itself

- model_prediction : For prediction

- MAPE: Calculate MAPE between actual and predicted prices

- final_prediction: Prediction of future
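For reference, MAPE (mean absolute percentage error) averages |actual - predicted| / |actual| over all samples. A small stand-alone sketch with made-up numbers, assuming no actual price is zero, could look like this (the lowercase name `mape` is chosen for the sketch only):

```python
def mape(actual, predicted):
    """Mean absolute percentage error as a fraction (multiply by 100 for %)."""
    errors = [abs((a - p) / a) for a, p in zip(actual, predicted)]
    return sum(errors) / len(errors)

actual = [100.0, 200.0, 400.0]
predicted = [110.0, 180.0, 400.0]
score = mape(actual, predicted)
print(f"MAPE: {score * 100:.2f}%")
```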

import pandas as pd
import numpy as np
import tensorflow as tf
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense
from tensorflow.keras.layers import LSTM, Bidirectional, GRU, Conv1D, Conv2D, MaxPooling1D

def Model_CNN_BGRU(X, Y, Look_back_period):
    model = Sequential()
    model.add(Conv1D(100, (3), activation='relu', padding='same', input_shape=(Look_back_period, 1)))
    model.add(MaxPooling1D(pool_size=2, strides=1, padding='valid'))
    model.add(Bidirectional(GRU(50)))
    model.add(Dense(1))
    model.compile(loss='mean_squared_error', optimizer='adam', metrics=['accuracy'])
    print(X.shape)
    model.fit(X, Y, epochs=100, batch_size=64, verbose=0)
    model_cnn_bgru = model
    return model_cnn_bgru

def model_prediction(data, model, company_df, Look_back_period, future):
    L = Look_back_period
    f = future
    mean_std = data[0]
    mean = mean_std[0]
    std = mean_std[1]
    # number of windows that have a known actual price to compare against
    ran = company_df.shape[0] - (L + f)
    company_df = company_df.reset_index()
    # rows of (date, actual, prediction); DataFrame.append was removed in pandas 2.0
    rows = []
    for i in range(ran):
        count = i + L
        tail = company_df[i:count]
        # setting date as index
        tail = tail.set_index('Date')
        numpy_array = tail.values
        predict_input = np.zeros(shape=(1, L, 1))
        for j in range(L):
            predict_input[:, j] = numpy_array[j]
        predict_scaled = (predict_input - mean) / std
        prediction = model.predict(predict_scaled)
        # undo the scaling to get back to price units
        predict_final = (prediction * std) + mean
        count = count + f
        date = company_df['Date'][count]
        actual = company_df['Close'][count]
        rows.append([date, actual, predict_final[0][0]])
    record = pd.DataFrame(rows, columns=["Date", "Actual", "Prediction"])
    # converting to datetime format
    record['Date'] = pd.to_datetime(record['Date'])
    return record

def MAPE(record):
    num = record.shape[0]
    record['error'] = abs((record['Actual'] - record['Prediction']) / record['Actual'])
    sum2 = record['error'].sum()
    mape = sum2 / num
    return mape

def final_prediction(company_df, lookback, data, model):
    mean_std = data[0]
    mean = mean_std[0]
    std = mean_std[1]
    last_data = company_df.tail(lookback)
    last_data = last_data.values
    predict_scaled = (last_data - mean) / std
    predict_scaled = predict_scaled.reshape((1, lookback, 1))
    prediction = model.predict(predict_scaled)
    predict_final = (prediction * std) + mean
    return predict_final

interpolate.py

According to this paper, the suitable lookback periods for the different forecast horizons are as follows:

- Short term (Tomorrow): suggested lookback period = 5 days

- Mid term (After 15 days) : suggested lookback period = 50 days

- Long term (After 30 days) : suggested lookback period = 100 days

For other futures, i.e. values between 0 and 15 or between 15 and 30, the lookback period is calculated by cubic-spline interpolation using the SciPy library.
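To see what this interpolation produces without installing anything, the sketch below evaluates a natural cubic spline through the three points (0, 5), (15, 50) and (30, 100) by hand. This is a hand-rolled stand-in written for exactly three knots, not SciPy's implementation, and the function name `natural_spline_3` is hypothetical. It should agree with a natural-boundary cubic spline from SciPy up to floating-point rounding.

```python
def natural_spline_3(xs, ys, x):
    """Evaluate the natural cubic spline through exactly three knots at x."""
    (x0, x1, x2), (y0, y1, y2) = xs, ys
    h0, h1 = x1 - x0, x2 - x1
    # Second derivative at the middle knot; it is zero at both ends ("natural").
    m1 = 6 * ((y2 - y1) / h1 - (y1 - y0) / h0) / (2 * (h0 + h1))
    if x <= x1:
        left, right, h = x0, x1, h0
        y_left, y_right, m_left, m_right = y0, y1, 0.0, m1
    else:
        left, right, h = x1, x2, h1
        y_left, y_right, m_left, m_right = y1, y2, m1, 0.0
    return (m_left * (right - x) ** 3 / (6 * h)
            + m_right * (x - left) ** 3 / (6 * h)
            + (y_left / h - m_left * h / 6) * (right - x)
            + (y_right / h - m_right * h / 6) * (x - left))

knots_x, knots_y = [0, 15, 30], [5, 50, 100]
for future in (0, 7, 15, 22, 30):
    lookback = natural_spline_3(knots_x, knots_y, future)
    print(future, round(lookback, 2))
```

Note that find_lookback truncates the interpolated value with int(), so a value such as 25.54 becomes a lookback period of 25 days.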

from scipy.interpolate import CubicSpline
import numpy as np

def find_lookback(future):
    x = [0, 15, 30]
    y = [5, 50, 100]
    # bc_type='natural' sets the second derivative to zero at both end points
    f = CubicSpline(x, y, bc_type='natural')
    x_new = future
    y_new = int(f(x_new))
    print(y_new)
    return y_new

main.py

This is the main file, containing the Streamlit code.
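A text field returns a string, so the future value has to be converted to an integer before it is used. The sketch below shows one defensive way to do that conversion. The helper `parse_future` and its fallback to 0 are assumptions made for illustration; the app itself converts the value with a plain int() call.

```python
def parse_future(text, default=0):
    """Convert the text typed by the user into a non-negative int.

    Falls back to `default` when the text is empty, not a whole number,
    or negative.
    """
    try:
        value = int(text.strip())
    except ValueError:
        return default
    return value if value >= 0 else default

for raw in ["", "15", " 7 ", "abc", "-3"]:
    print(repr(raw), "->", parse_future(raw))
```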

import streamlit as st

import pandas as pd

from tensorflow.keras.models import load_model

#Importing my own libraries

from scrapper import price_history

from process import data_for_model

from rnn_models import Model_CNN_BGRU

from rnn_models import model_prediction

from rnn_models import final_prediction

from rnn_models import MAPE

from interpolate import find_lookback

def main():

#setting starting date on 1st jan 2018

Start_date = "2018-10-01"

#setting default value of lookback period and future

lookbackperiod = 5

future = 0

#Any empty dataframe

company_df = pd.DataFrame()

#The title

st.title('CNN-BGRU method of Stock Price Prediction')

#Asking using to enter the symbol listed in NEPSE

company_name = st.text_input('Enter Company name')

if(company_name != ""):

#Asking user to enter the future

future = st.text_input('Enter future')

if(type(future) == str and future != ""):

future = int(future)

#Setting the lookback period for different type of futures

if(future == 0):

lookbackperiod = 5

elif(future == 15):

lookbackperiod = 50

elif (future == 30):

lookbackperiod = 100

#using cubic spline interpolation to calculate lookback period

elif (type(future) == int):

lookbackperiod = find_lookback(future)

else:

future = 0

try:

#calling function to get price history

company_df = price_history(company_name,Start_date)

except:

print("An exception occurred")

#Printing the basics

st.subheader('Head of Data')

st.dataframe(company_df.head())

st.subheader('Tail of Data')

st.dataframe(company_df.tail())

st.write("Length of data")

st.write(len(company_df))

st.write("Length of lookback period")

st.write(lookbackperiod)

#converting the dataframe to data ready for tensorflow model

data = data_for_model(company_df,lookbackperiod,future)

X = data[1]

Y = data[2]

#Press this button to train the model

#Along with training, we also compare the predicted and actual price

#save the model

model_name = 'model_cnn_bgru_'+company_name+"_"+str(future)+".h5"

if st.button('Train and save Model'):

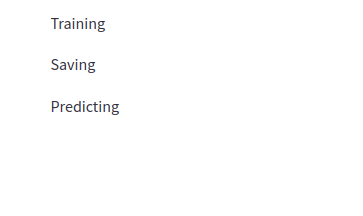

st.write('Training')

model_CNN_BGRU = Model_CNN_BGRU(X,Y,lookbackperiod)

st.write('Saving')

model_CNN_BGRU.save(model_name)

st.write('Predicting ')

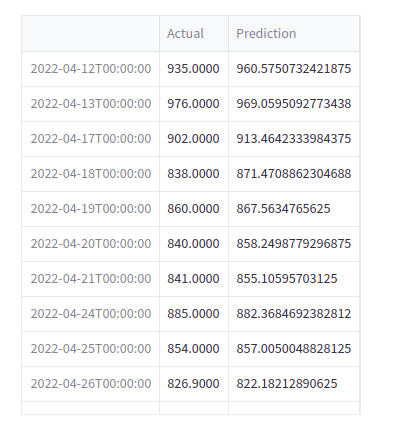

record = model_prediction(data,model_CNN_BGRU,company_df,lookbackperiod,future)

record = record.set_index('Date')

st.write(record)

st.line_chart(record)

error = MAPE(record)

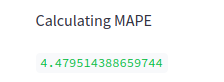

st.write('Calculating MAPE')

error = error*100

st.write(error)

#When this button is pressed our model will finally predict the stock price in required future

if st.button("Predict for future"):

#model_name = 'model_cnn_bgru_'+company_name+"_"+str(future)+".h5"

model = load_model(model_name)

prediction = final_prediction(company_df,lookbackperiod,data,model)

prediction = "This model predicts that the stock price of " + company_name + " after "+str(future+1)+" days "+"is "+str(prediction[0][0])

st.write(prediction)

if __name__ == '__main__':

main()Run the streamlit

streamlit run main.py

Output

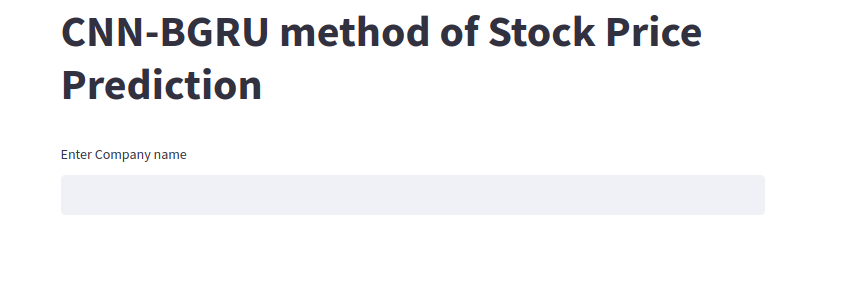

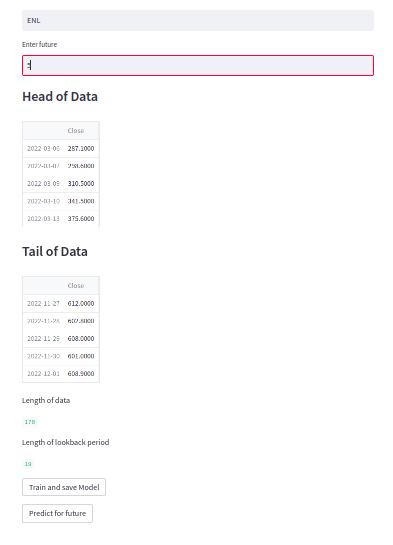

Landing Page

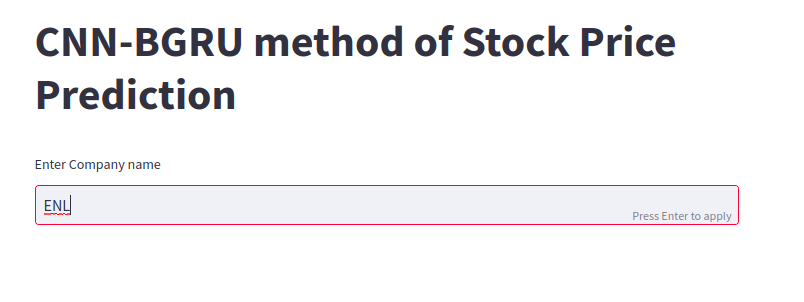

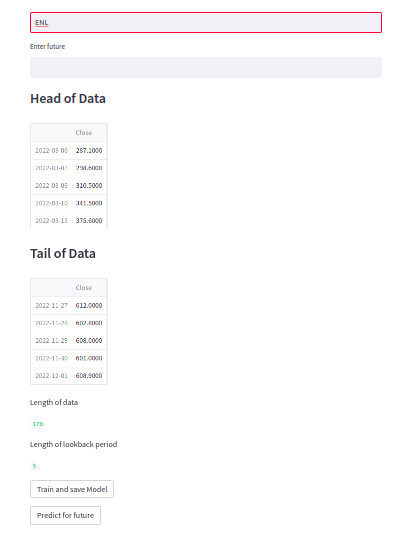

After entering the stock symbol

Entering the value of future

Training

Comparison

Final Prediction

Adding the files to git repository

git add .

git commit -m "Basic Files"

git push -u origin main

Adding requirements file

python3 -m pip freeze>requirements.txt

#push it to git using the commands from the section above

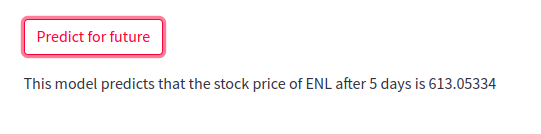

Hosting app on streamlit cloud

Sign up with your GitHub account

Sign in

At the top right you will see the New App option

Click it

You will see the following

Paste your GitHub repository

Enter your branch

Enter the Streamlit file (main.py)

Click Deploy

It will take some time.

Application On Streamlit