Basic Neural Network in PyTorch

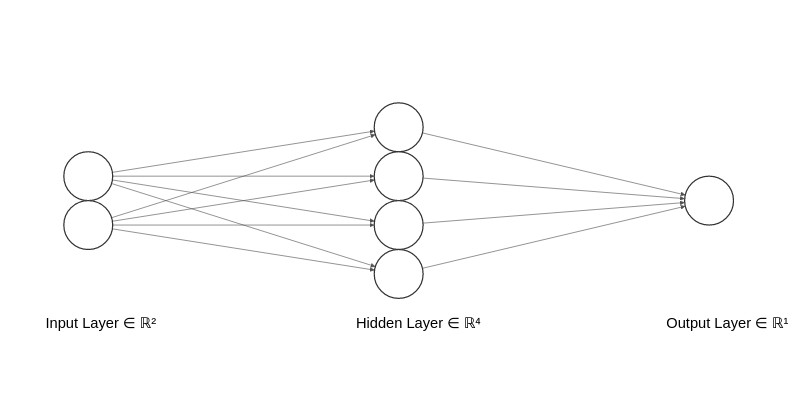

This post builds a simple neural network in PyTorch with a single hidden layer of four neurons.

Schema of our Neural Network

Import necessary libraries

import torch

import torch.nn as nn

Inputs and outputs

#our input contains four items each with two features

x = [[1,2], [3,4], [5,6], [7,8]]

#our output contains the target value for each of the four inputs

y = [[3],[7],[11],[15]]

Converting basic Python lists to PyTorch tensors

x = torch.tensor(x).float()

y = torch.tensor(y).float()

Code to use GPU if available

device = 'cuda' if torch.cuda.is_available() else 'cpu'

Moving our variables to the selected device

x = x.to(device)

y = y.to(device)

Defining the neural network

class MyNeuralNet(nn.Module):

    def __init__(self):

        super().__init__()

        #define hidden layer: 2 input features -> 4 neurons

        self.input_to_hidden_layer = nn.Linear(2,4)

        #define activation function of hidden layer

        self.hidden_layer_activation = nn.ReLU()

        #define output layer: 4 hidden values -> 1 output

        self.hidden_to_output_layer = nn.Linear(4,1)

    #define the feed-forward pass based on the layers above

    def forward(self,x):

        x = self.input_to_hidden_layer(x)

        x = self.hidden_layer_activation(x)

        x = self.hidden_to_output_layer(x)

        return x
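To make the forward pass concrete, here is a minimal pure-Python sketch of what Linear(2,4) followed by ReLU and Linear(4,1) computes for a single input. The weight and bias values are made up for illustration; a real `MyNeuralNet` starts from random values, and the helper names `linear` and `relu` are hypothetical, not PyTorch functions.

```python
# Hand-written forward pass for one sample; all numbers are illustrative assumptions.

def linear(inputs, weights, biases):
    """Compute one output per weight row: dot(row, inputs) + bias."""
    return [sum(w * v for w, v in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def relu(values):
    """Replace negative values with zero."""
    return [max(0.0, v) for v in values]

# Hypothetical parameters: 4 hidden neurons with 2 inputs each, then 1 output neuron.
hidden_w = [[0.5, 0.5], [1.0, -1.0], [-0.5, 0.25], [0.0, 1.0]]
hidden_b = [0.0, 0.0, 0.0, 0.0]
output_w = [[1.0, 1.0, 1.0, 1.0]]
output_b = [0.0]

sample = [1.0, 2.0]
hidden = relu(linear(sample, hidden_w, hidden_b))
prediction = linear(hidden, output_w, output_b)
print(hidden, prediction)
```

The second hidden neuron produces a negative value before the activation, so ReLU sets it to zero and it contributes nothing to the output for this sample.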

Instantiating the neural network

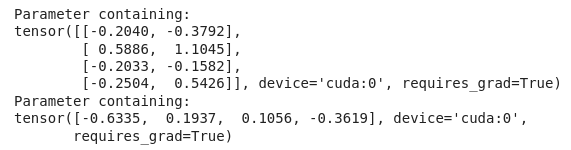

mynet = MyNeuralNet().to(device)

Weights of different layers

#weights of hidden layer neurons

print(mynet.input_to_hidden_layer.weight)

print(mynet.input_to_hidden_layer.bias)

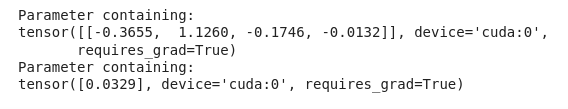

#weights of output layer

print(mynet.hidden_to_output_layer.weight)

print(mynet.hidden_to_output_layer.bias)
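`nn.Linear(in_features, out_features)` stores its weight as an `(out_features, in_features)` matrix plus one bias per output, so the tensors printed above should have shapes (4, 2), (4,), (1, 4), and (1,). A short check, using standalone layers of the same sizes rather than `mynet` itself:

```python
import torch.nn as nn

hidden = nn.Linear(2, 4)   # same sizes as input_to_hidden_layer
output = nn.Linear(4, 1)   # same sizes as hidden_to_output_layer

# Collect the shape of every weight and bias, and count the trainable values.
shapes = [tuple(p.shape) for layer in (hidden, output) for p in layer.parameters()]
total = sum(p.numel() for layer in (hidden, output) for p in layer.parameters())
print(shapes)
print(total)
```

The total works out to 2*4 + 4 for the hidden layer and 4*1 + 1 for the output layer.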

Loss function

#Loss function

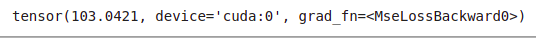

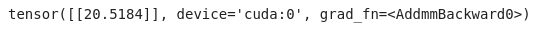

loss_func = nn.MSELoss()

One forward propagation through the network

_y = mynet(x)

loss_value = loss_func(_y,y)

print(loss_value)
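With its default settings, `nn.MSELoss()` averages the squared differences between predictions and targets. The sketch below reproduces that arithmetic in plain Python; the predictions are hypothetical values chosen for illustration, not output from `mynet`, and `mean_squared_error` is a helper name introduced here.

```python
def mean_squared_error(predictions, targets):
    """Average of squared differences, like MSELoss with the default 'mean' reduction."""
    squared = [(p - t) ** 2 for p, t in zip(predictions, targets)]
    return sum(squared) / len(squared)

targets = [3.0, 7.0, 11.0, 15.0]        # the y values from this article
predictions = [2.0, 7.0, 12.0, 13.0]    # hypothetical, for illustration only
loss = mean_squared_error(predictions, targets)
print(loss)
```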

Defining the optimizer

#setting Stochastic gradient descent as an optimizer

from torch.optim import SGD

opt = SGD(mynet.parameters(),lr=0.001)

Training the network for 50 epochs
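Plain SGD, as configured above without momentum, updates each parameter by subtracting the learning rate times its gradient. Here is a minimal one-parameter sketch of that rule, assuming a toy model `pred = w * x_val` and a squared-error loss. Neither the toy model nor the `sgd_step` helper comes from the article's network.

```python
def sgd_step(w, grad, lr):
    """One plain SGD update: move the parameter against its gradient."""
    return w - lr * grad

w = 0.0
x_val, target = 2.0, 4.0   # toy data point, an assumption for illustration
lr = 0.1                   # larger than the article's 0.001 so progress is visible

losses = []
for _ in range(5):
    pred = w * x_val
    losses.append((pred - target) ** 2)
    grad = 2 * (pred - target) * x_val   # derivative of the loss with respect to w
    w = sgd_step(w, grad, lr)
print(losses)
print(w)
```

In the PyTorch loop, `loss_value.backward()` plays the role of the hand-written gradient line and `opt.step()` applies the update to every parameter at once.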

loss_history = []

for _ in range(50):

opt.zero_grad()

loss_value = loss_func(mynet(x),y)

loss_value.backward()

opt.step()

loss_value = loss_value.cpu().data.numpy()

#loss_value = loss_value.numpy()

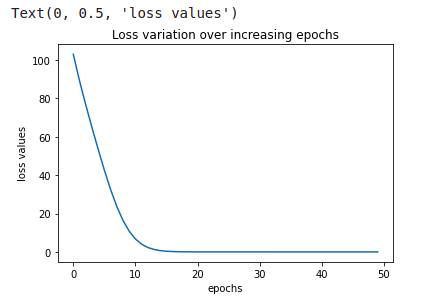

loss_history.append(loss_value)Plotting the loss

import matplotlib.pyplot as plt

#Jupyter notebook magic; remove this line when running as a plain script

%matplotlib inline

plt.plot(loss_history)

plt.title('Loss variation over increasing epochs')

plt.xlabel('epochs')

plt.ylabel('loss values')

Feeding a new value to the neural network

val_x = [[10,11]]

val_x = torch.tensor(val_x).float().to(device)

mynet(val_x)
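Every training target in this post is the sum of its two input features (1+2=3, 3+4=7, and so on), so a well-trained network would be expected to return something near 21 for `[10, 11]`; after only 50 epochs the output may not be that close yet. For prediction it is common to wrap the call in `torch.no_grad()` so that no gradient graph is built. A self-contained sketch, using an untrained `nn.Sequential` stand-in with the same layer sizes rather than the trained `mynet`:

```python
import torch
import torch.nn as nn

# Untrained stand-in with the same layer sizes as MyNeuralNet (an assumption for illustration).
net = nn.Sequential(nn.Linear(2, 4), nn.ReLU(), nn.Linear(4, 1))
net.eval()  # inference mode; it changes nothing for these layers, but is a common habit

val_x = torch.tensor([[10, 11]]).float()
with torch.no_grad():
    prediction = net(val_x)

print(prediction.shape)          # one row, one output value
print(prediction.requires_grad)  # gradients are not tracked inside no_grad
```

To read the prediction as a plain Python number, `prediction.item()` works for a single-element tensor like this one.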

pontu
