Breast Cancer Classification with Azure ML & MLflow: End-to-End ML Tutorial (2024)

Learn how to build, compare, and deploy machine learning models for breast cancer classification using Azure ML and MLflow. This step-by-step tutorial covers data preprocessing, model training, performance tracking, and deployment to a scalable API—perfect for data scientists and ML engineers.

Setup

For the basics of Azure, see How to Train, Register, and Deploy a Model in Azure ML; for the basics of MLflow, see Mastering MLflow Tracking for SVM Models on the Digits Dataset. After creating an Azure account and creating and launching Azure AI Machine Learning studio, click at the top right of your ML studio home page and download the config file.

Launch a terminal, create a directory, and create a virtual environment. After activating the environment, run the following command.

pip install azureml-sdk mlflow scikit-learn pandas matplotlib requests

- azureml-sdk: Azure Machine Learning Python SDK for model deployment

- mlflow: Experiment tracking, model logging, and deployment

- scikit-learn: Machine learning models (Random Forest, SVM, etc.)

- pandas: Data manipulation (loading/testing datasets)

- matplotlib: Performance comparison graphs

- requests: API calls to test deployed model

Create an Azure_MLFlow.ipynb notebook and put the config file in the same folder.

Imports

from azureml.core import Workspace

from azureml.core.model import InferenceConfig,Model

from azureml.core.environment import Environment

from azureml.core.webservice import AciWebservice,Webservice

import mlflow

import mlflow.sklearn

from mlflow.tracking import MlflowClient

from mlflow.models.signature import infer_signature

from sklearn.model_selection import train_test_split

from sklearn.svm import SVC

from sklearn.ensemble import RandomForestClassifier,GradientBoostingClassifier

from sklearn.metrics import accuracy_score,f1_score

from sklearn.datasets import load_breast_cancer

import pandas as pd

import matplotlib.pyplot as plt

import numpy as np

import requests

import json

Connect and track

ws = Workspace.from_config()

- Connects to your Azure Machine Learning workspace using a local config.json file.

- This file identifies the workspace to connect to (subscription ID, resource group, workspace name).

mlflow.set_tracking_uri(ws.get_mlflow_tracking_uri())

- Configures MLflow to log experiments/metrics directly to your Azure ML workspace.

- Azure ML provides a built-in MLflow-compatible tracking server.
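As a quick sanity check before calling Workspace.from_config(), the sketch below verifies that config.json carries the three fields mentioned above. The helper name check_workspace_config is hypothetical, and the key names (subscription_id, resource_group, workspace_name) are assumed to match the file downloaded from the studio.

```python
import json
from pathlib import Path

# Assumed key names in the config.json downloaded from the studio.
REQUIRED_KEYS = ("subscription_id", "resource_group", "workspace_name")


def check_workspace_config(path="config.json"):
    """Return the required keys that are missing or empty in the config file."""
    config = json.loads(Path(path).read_text(encoding="utf-8"))
    return [key for key in REQUIRED_KEYS if not config.get(key)]


if __name__ == "__main__":
    if Path("config.json").exists():
        missing = check_workspace_config()
        if missing:
            print(f"config.json is missing: {missing}")
        else:
            print("config.json looks complete")
    else:
        print("config.json not found in the current directory")
```

Running this in the notebook folder before connecting can make a misplaced or truncated config file easier to spot than the SDK's own error.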

Load Data from sklearn

# Load dataset

X, y = load_breast_cancer(return_X_y=True)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

Preparation For the Model Training

# Dictionary to store model performances

model_performance = {}

# List of algorithms to evaluate

algorithms = {
    "Random Forest": RandomForestClassifier(n_estimators=100, random_state=42),
    "Gradient Boosting": GradientBoostingClassifier(random_state=42),
    "SVM": SVC(probability=True, random_state=42)
}

# Track metrics

accuracy_scores = []

f1_scores = []

Train, Track and Register

This code trains multiple ML models (Random Forest, Gradient Boosting, SVM) on the breast cancer data, tracks performance metrics (accuracy/F1) using MLflow, visualizes the results, and registers the best model in Azure ML. It uses nested runs for organized experiment tracking and logs each model with an input example and signature, so the winning model is ready for deployment.

- Experiment Setup:

- Starts a parent MLflow run to group all model trials.

- Logs dataset metadata as parameters for reproducibility

with mlflow.start_run() as run:
    mlflow.log_params({"dataset": "breast_cancer", "test_size": 0.2, "random_state": 42})

- Model Training Loop:

- Trains each algorithm in nested child runs (organized under the parent).

- Uses scikit-learn’s standard fit()/predict() workflow.

for algo_name, model in algorithms.items():
    with mlflow.start_run(nested=True, run_name=algo_name):
        model.fit(X_train, y_train)
        predictions = model.predict(X_test)

- Metric Logging & Model Registration

- Logs performance metrics (accuracy/F1) and hyperparameters.

- Saves each model to MLflow with:

- Input example: Sample data for API testing

- Signature: Expected input/output schema

- Registration: Names models like

cancer_random_forest

mlflow.log_metric("accuracy", accuracy)

mlflow.log_metric("f1_score", f1)

mlflow.log_params(model.get_params())

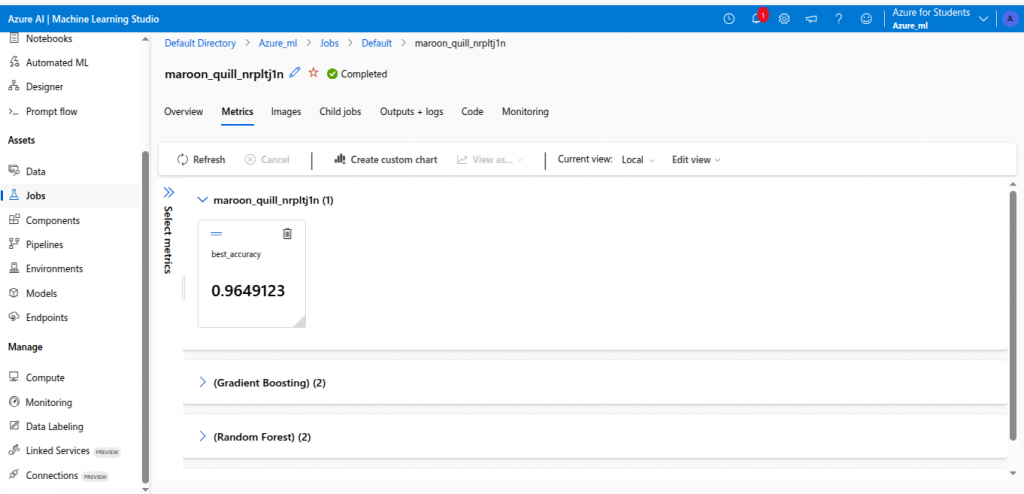

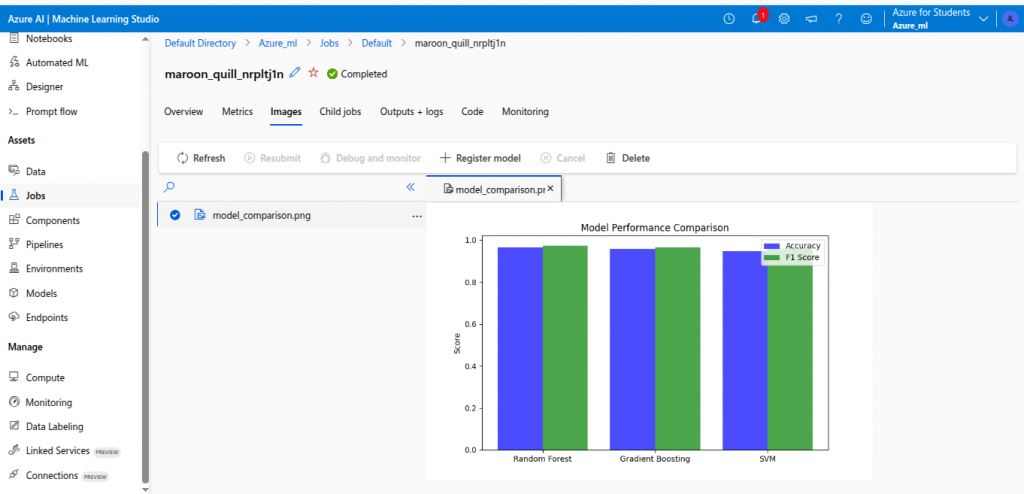

mlflow.sklearn.log_model(..., registered_model_name="cancer_"+algo_name)- Visualization & Best Model Selection

- Generates a bar chart comparing models.

- Saves the plot to MLflow artifacts.

plt.bar(...) # Accuracy vs. F1 comparison chart

mlflow.log_figure(plt.gcf(), "model_comparison.png")

- Best Model Promotion

- Tracks the highest accuracy model.

- Registers the winner to Azure ML’s model registry for deployment.

if accuracy > best_accuracy:
    best_model_uri = model_uri.model_uri

mlflow.register_model(best_model_uri, "best_cancer_model")

- Cleanup & Output

- Prints the parent run ID for reference in Azure ML Studio.

print(f"Parent Run ID: {run.info.run_id}")

Complete code for Train, Track and Register

# Start MLflow run
with mlflow.start_run() as run:
    mlflow.log_params({"dataset": "breast_cancer", "test_size": 0.2, "random_state": 42})

    model_performance = {}
    best_model_uri = None
    best_model_name = None
    best_accuracy = 0.0
    accuracy_scores = []
    f1_scores = []

    # Train and evaluate each algorithm
    for algo_name, model in algorithms.items():
        print(f"\nTraining {algo_name}...")
        try:
            with mlflow.start_run(nested=True, run_name=algo_name) as nested_run:
                model.fit(X_train, y_train)
                predictions = model.predict(X_test)
                accuracy = accuracy_score(y_test, predictions)
                f1 = f1_score(y_test, predictions)

                mlflow.log_metric("accuracy", accuracy)
                mlflow.log_metric("f1_score", f1)
                mlflow.log_params(model.get_params())
                mlflow.set_tag("algorithm", algo_name)

                artifact_path = f"{algo_name.lower().replace(' ', '_')}_model"
                registered_model_name = f"cancer_{algo_name.lower().replace(' ', '_')}"

                # Define Input Example & Signature
                input_example = X_train[:1]  # Example input
                signature = infer_signature(X_train, model.predict(X_train))  # Model signature

                # log_model returns a ModelInfo object; its model_uri attribute holds the URI string
                model_uri = mlflow.sklearn.log_model(
                    sk_model=model,
                    artifact_path=artifact_path,
                    registered_model_name=registered_model_name,
                    input_example=input_example,
                    signature=signature
                )

                model_performance[algo_name] = accuracy
                print(f"{algo_name} - Accuracy: {accuracy:.4f}")

                # Store metrics
                accuracy_scores.append(accuracy)
                f1_scores.append(f1)

                if accuracy > best_accuracy:
                    best_accuracy = accuracy
                    best_model_uri = model_uri.model_uri
                    best_model_name = algo_name
        except Exception as e:
            print(f"⚠️ Error in {algo_name} run: {e}")

    # Create comparison graph (labels cover only the models that trained
    # successfully, so they always match the stored scores)
    x_labels = list(model_performance.keys())
    x = np.arange(len(x_labels))
    plt.figure(figsize=(8, 5))
    plt.bar(x - 0.2, accuracy_scores, width=0.4, label="Accuracy", color='blue', alpha=0.7)
    plt.bar(x + 0.2, f1_scores, width=0.4, label="F1 Score", color='green', alpha=0.7)
    plt.xticks(x, x_labels)
    plt.ylabel("Score")
    plt.title("Model Performance Comparison")
    plt.legend()

    # Log the figure in MLflow
    mlflow.log_figure(plt.gcf(), "model_comparison.png")

    # Show plot
    plt.show()

    # Log best model details
    if best_model_uri:
        print("\n*************************")
        print(f"Best Model: {best_model_name} with Accuracy: {best_accuracy:.4f}")
        mlflow.log_metric("best_accuracy", best_accuracy)
        mlflow.log_param("best_algorithm", best_model_name)

        # Register best model as 'best_cancer_model'
        try:
            mlflow.register_model(model_uri=best_model_uri, name="best_cancer_model")
            print(f"\n✅ Successfully registered best model: {best_model_name} as 'best_cancer_model'")
        except Exception as e:
            print(f"⚠️ Failed to register best model: {e}")
    else:
        print("⚠️ No valid models were trained.")

    print(f"\nParent Run ID: {run.info.run_id}")
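The best-model bookkeeping in the loop above can also be expressed directly on the model_performance dictionary. The following is a minimal sketch, not the tutorial's own code: the helper name pick_best is hypothetical, and the scores are made-up placeholders rather than results from this experiment.

```python
def pick_best(model_performance):
    """Return (name, score) of the highest-scoring model, or (None, None) if empty."""
    if not model_performance:
        return None, None
    # max() keeps the first entry on ties, like the strict ">" check in the loop.
    best_name = max(model_performance, key=model_performance.get)
    return best_name, model_performance[best_name]


# Hypothetical accuracies, for illustration only.
example_scores = {"Random Forest": 0.96, "Gradient Boosting": 0.95, "SVM": 0.94}
best_name, best_score = pick_best(example_scores)
print(f"Best Model: {best_name} with Accuracy: {best_score:.4f}")
```

Tracking the best model inside the loop, as the tutorial does, has the advantage that the model URI is captured at the same moment; the dictionary form is mainly useful for inspecting results afterwards.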

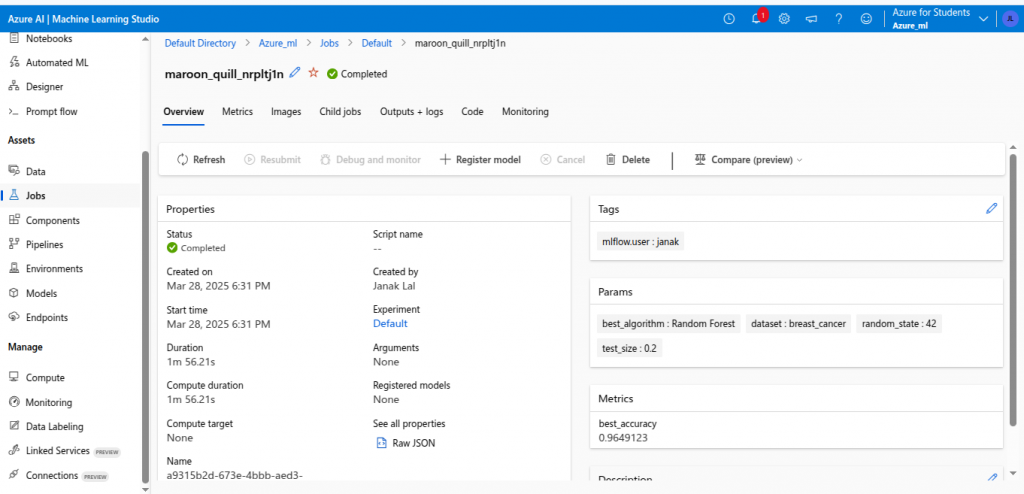

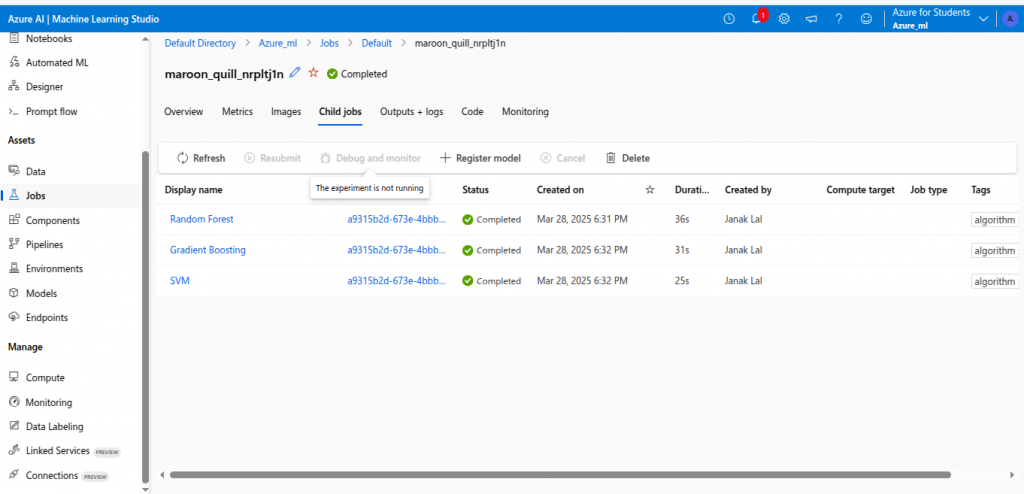
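To make the two logged metrics concrete, here is a small pure-Python sketch of how accuracy and F1 are computed for binary labels. It assumes the positive class is label 1, which is the default that f1_score uses; in the scikit-learn breast cancer dataset, label 1 means benign. The function name accuracy_and_f1 and the toy label lists are hypothetical.

```python
def accuracy_and_f1(y_true, y_pred, positive=1):
    """Compute accuracy and F1 for binary labels from raw counts."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and equally long")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)
    accuracy = correct / len(pairs)
    # F1 = 2*TP / (2*TP + FP + FN), the harmonic mean of precision and recall.
    denominator = 2 * tp + fp + fn
    f1 = 2 * tp / denominator if denominator else 0.0
    return accuracy, f1


# Toy labels, for illustration only.
y_true = [1, 1, 1, 0, 0, 1, 0, 1]
y_pred = [1, 1, 0, 0, 1, 1, 0, 1]
accuracy, f1 = accuracy_and_f1(y_true, y_pred)
print(f"accuracy={accuracy:.2f}, f1={f1:.2f}")
```

Because F1 here scores the benign class, a model can post a high F1 while still missing malignant cases, so it may be worth checking recall on label 0 separately.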

Look at the job just completed in Azure

Go to jobs. Click the latest one.

Deploy the model as an endpoint in Azure

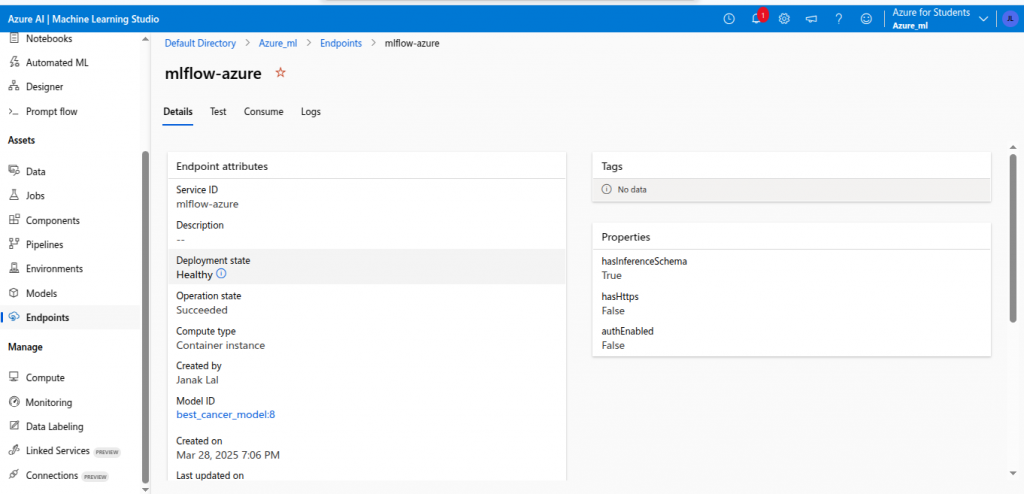

This code deploys the registered best_cancer_model to Azure as a REST API using Azure Container Instances (ACI). It configures a custom environment with MLflow/scikit-learn and specifies an entry script (score_azure_mlflow.py) for inference. The deployment runs with 1 CPU core and 1GB RAM.

- Environment Setup

- Names the environment azure_mlflow.

- Installs MLflow (for model loading) and scikit-learn (for inference).

- Ensures consistency between training and deployment environments.

env = Environment(name="azure_mlflow")

env.python.conda_dependencies.add_pip_package("mlflow")

env.python.conda_dependencies.add_pip_package("scikit-learn")

- Inference Configuration

- entry_script: The Python script (score_azure_mlflow.py) that loads the model and handles input/output (e.g., pre/post-processing).

- environment: Links to the custom environment created earlier.

inference_config = InferenceConfig(entry_script="score_azure_mlflow.py", environment=env)

- Model Retrieval

- Fetches the registered model from Azure ML’s model registry.

- Assumes best_cancer_model was previously registered via mlflow.register_model().

model = Model(ws, "best_cancer_model")

- Deployment Configuration

- Sets compute resources for the ACI container.

deployment_config = AciWebservice.deploy_configuration(cpu_cores=1, memory_gb=1)

- Deployment Execution

- Packages the model, environment, and entry script into a Docker container.

- Deploys to Azure Container Instances (ACI), a serverless option.

- show_output=True prints real-time logs during deployment.

- Returns a Webservice object with attributes like .scoring_uri (for API calls).

service = Model.deploy(ws, "mlflow-azure", [model], inference_config, deployment_config)

service.wait_for_deployment(show_output=True)

This might take a while to complete.

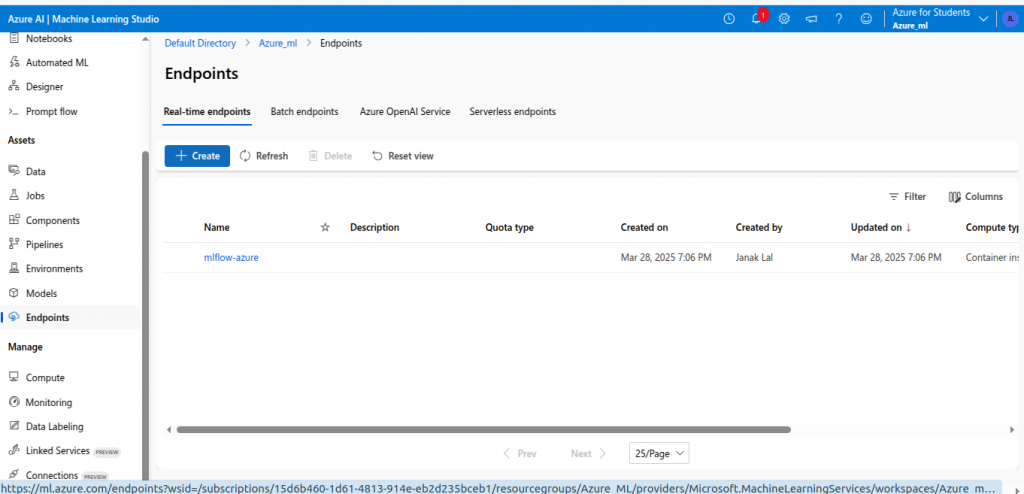

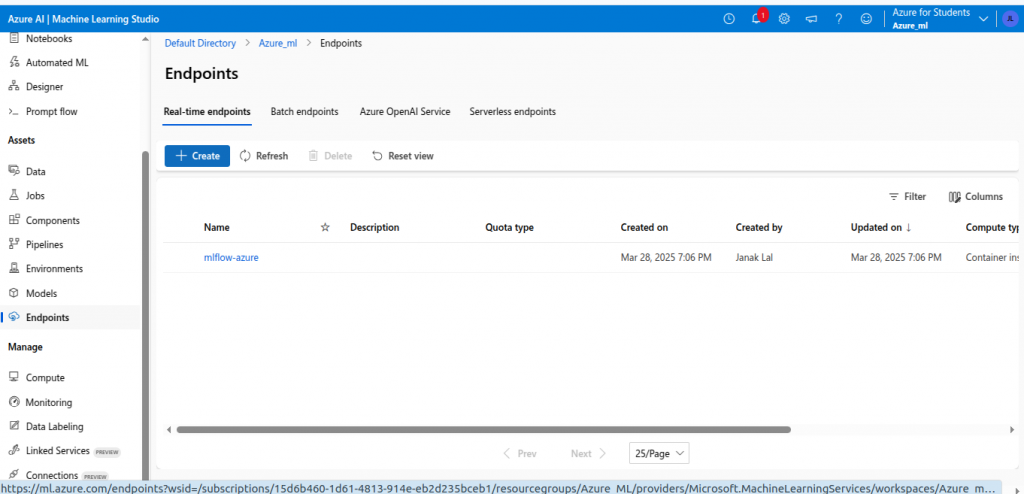

After completion, you can see your endpoint under Endpoints in Azure ML studio.

Score file

Create a file named score_azure_mlflow.py.

The following code defines an Azure ML inference script (score_azure_mlflow.py) that loads the registered best_cancer_model using MLflow and processes input data to return predictions. The init() function loads the model at startup, while run() handles JSON input, runs predictions, and formats the output.

init()

- Loads the model once when the Azure ML container starts.

- Model.get_model_path(): Fetches the latest version of best_cancer_model from Azure ML’s registry.

- mlflow.pyfunc.load_model(): Loads the model as a generic Python function (supports any MLflow-flavored model).

- Uses global model to persist the loaded model across requests.

# Imports needed by the score script (it runs outside the notebook)
import json
import numpy as np
import mlflow
from azureml.core.model import Model

def init():
    global model
    model_dir = Model.get_model_path("best_cancer_model")
    model = mlflow.pyfunc.load_model(model_dir)

run(data)

- Processes incoming HTTP requests and returns model predictions.

- Parses JSON input (e.g., {"data": [[1.0, 2.0, ...]]}) and extracts the data field.

- Converts input to a NumPy array (required by scikit-learn models).

- Calls model.predict() and formats results as a JSON-serializable list.

- Returns errors (e.g., invalid input) with a descriptive message.
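As a complement to the run() steps listed above, the sketch below shows one way the request body could be checked before it reaches the model. It is an optional addition under stated assumptions, not part of the tutorial's score script: the helper name parse_payload is hypothetical, and the row length of 30 reflects the 30 features of the scikit-learn breast cancer dataset.

```python
import json

N_FEATURES = 30  # the scikit-learn breast cancer dataset has 30 numeric features


def parse_payload(raw):
    """Parse a JSON request body and return its rows, or raise ValueError."""
    try:
        payload = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"Body is not valid JSON: {exc}") from exc
    if not isinstance(payload, dict) or "data" not in payload:
        raise ValueError('Expected a JSON object with a "data" key')
    rows = payload["data"]
    if not isinstance(rows, list) or not rows:
        raise ValueError('"data" must be a non-empty list of rows')
    for i, row in enumerate(rows):
        if not isinstance(row, list) or len(row) != N_FEATURES:
            raise ValueError(f"Row {i} must be a list of {N_FEATURES} numbers")
        # bool is a subclass of int, so it is excluded explicitly.
        if not all(isinstance(v, (int, float)) and not isinstance(v, bool) for v in row):
            raise ValueError(f"Row {i} contains non-numeric values")
    return rows


# Example: a single row of 30 zeros passes validation.
rows = parse_payload(json.dumps({"data": [[0.0] * N_FEATURES]}))
print(len(rows), len(rows[0]))
```

A helper like this could be called at the top of run(), with the ValueError message returned in the "error" field, so that callers see a specific reason instead of a generic scikit-learn shape error.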

def run(data):
    try:
        input_data = json.loads(data)["data"]
        predictions = model.predict(np.array(input_data))
        return {"predictions": predictions.tolist()}
    except Exception as e:
        return {"error": str(e)}

Testing the deployment

The following code sends a test request to the deployed Azure ML model API, using the last sample from the test dataset (X_test[-1]). It formats the input as JSON, sets the appropriate headers, and prints the model’s prediction response.

- Prepare Input Data

- Takes the last row of the test set (X_test[-1]).

- Converts the NumPy array to a Python list (.tolist()).

- Wraps it in a dictionary with key "data" (matches the run() function’s expected format).

- Serializes to a JSON string (json.dumps()).

input_data = json.dumps({"data": [X_test[-1].tolist()]})

- Set HTTP Headers

- Tells the server the request contains JSON data.

- Without this header, Azure ML may reject the request.

headers = {"Content-Type": "application/json"}

- Send Request & Print Response

- Calls the deployed model and displays predictions.

- service.scoring_uri: The endpoint URL generated during deployment.

- requests.post: Sends the JSON data to the API.

- response.json(): Parses the API’s JSON response (e.g., {"predictions": [0]}).

response = requests.post(service.scoring_uri, data=input_data, headers=headers)

print(response.json())

Delete Endpoint If not in use

from azureml.core.webservice import Webservice

# Get the deployed service

service = Webservice(workspace=ws, name="mlflow-azure")

# Delete the service

service.delete()

print("Service deleted successfully.")