Build an Image Classifier on Azure: VGG16, Hymenoptera Data, and Flask Deployment

Learn how to build an end-to-end machine learning solution on Azure: train a VGG16 model on the Hymenoptera dataset for insect image classification, deploy it as an API endpoint, and create a Flask web application to consume the model. This tutorial covers data handling, model training, registration, deployment, and web integration—all within the Azure ecosystem.

Get the Data, Train and Register the Model

Launch the studio and open a notebook. Check basic setup needed for this tutorial here:

Create a folder named Hymenoptera and, inside it, create a file named hymenoptera.ipynb. Open the .ipynb file, then select the compute instance that you created in the previous tutorial and the kernel Python 3.8 – AzureML.

Imports

import torch

import torchvision

import kagglehub

import torch.nn as nn

import torch.optim as optim

from torchvision import datasets, models, transforms

import os

Get the data from Kaggle

We will download the data from Kaggle.

# Download latest version

path = kagglehub.dataset_download("ajayrana/hymenoptera-data")

print("Path to dataset files:", path)

Finding the right folder

from pathlib import Path

def get_folders(path):
    # Convert the input path to a Path object
    p = Path(path)
    # Check if the path exists; if not, raise an error
    if not p.exists():
        raise FileNotFoundError(f"The path {path} does not exist.")
    # Check if the path is a directory; if not, raise an error
    if not p.is_dir():
        raise NotADirectoryError(f"The path {path} is not a directory.")
    # Return a sorted list of folder names in the path
    return sorted(item.name for item in p.iterdir() if item.is_dir())

get_folders('/home/azureuser/.cache/kagglehub/datasets/ajayrana/hymenoptera-data/versions/1')

dataset_path = os.path.join(path, 'hymenoptera_data')

Define train and validation dir

# Define train and validation directories

train_dir = os.path.join(dataset_path, "train")

val_dir = os.path.join(dataset_path, "val")

print("Train Directory:", train_dir)

print("Validation Directory:", val_dir)

# Check if the directories exist

assert os.path.isdir(train_dir), "Train directory not found!"

assert os.path.isdir(val_dir), "Validation directory not found!"

print("Classes in train folder:", os.listdir(train_dir))

print("Classes in val folder:", os.listdir(val_dir))

Data Transformations

data_transforms = {
    "train": transforms.Compose([
        transforms.Resize((224, 224)),
        transforms.RandomHorizontalFlip(),
        transforms.ToTensor(),
        transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
    ]),
    "val": transforms.Compose([
        transforms.Resize((224, 224)),
        transforms.ToTensor(),
        transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
    ]),
}
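To make the last two steps of each pipeline concrete: ToTensor scales 8-bit pixel values to the range 0 to 1, and Normalize then subtracts the channel mean and divides by the channel standard deviation. The sketch below reproduces that arithmetic for a single RGB pixel in plain Python. The helper name normalize_pixel is illustrative and not part of torchvision.

```python
# ImageNet channel statistics used in the transforms above (R, G, B).
MEAN = [0.485, 0.456, 0.406]
STD = [0.229, 0.224, 0.225]


def normalize_pixel(rgb):
    """Mimic ToTensor + Normalize for a single 8-bit RGB pixel.

    Illustrative helper only; torchvision applies the same formula
    to whole image tensors.
    """
    scaled = [value / 255.0 for value in rgb]  # ToTensor: 0..255 -> 0..1
    return [(x - m) / s for x, m, s in zip(scaled, MEAN, STD)]


if __name__ == "__main__":
    print("white:", normalize_pixel((255, 255, 255)))
    print("black:", normalize_pixel((0, 0, 0)))
```

The same mean and standard deviation have to be used at inference time, otherwise the model sees inputs on a different scale than it was trained on.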

Data Creation

image_datasets = {
    "train": datasets.ImageFolder(train_dir, data_transforms["train"]),
    "val": datasets.ImageFolder(val_dir, data_transforms["val"]),
}

Data Loading

dataloaders = {
    "train": torch.utils.data.DataLoader(image_datasets["train"], batch_size=32, shuffle=True),
    "val": torch.utils.data.DataLoader(image_datasets["val"], batch_size=32, shuffle=False),
}

Class names

class_names = image_datasets["train"].classes

#The class names are extracted from the training dataset for later use (e.g., identifying labels).
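ImageFolder derives the label indices from the class folder names in sorted order, so the order of class_names matters later when predictions are mapped back to labels. The following sketch mirrors that convention with the standard library only. The helper class_to_index is hypothetical and is not torchvision's implementation.

```python
import tempfile
from pathlib import Path


def class_to_index(root):
    """Map each class folder under `root` to an integer label.

    Hypothetical helper: it follows the sorted-folder-name convention
    that ImageFolder uses, without importing torchvision.
    """
    names = sorted(p.name for p in Path(root).iterdir() if p.is_dir())
    return {name: index for index, name in enumerate(names)}


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        # Create the folders in reverse order to show that sorting decides the labels.
        for name in ("bees", "ants"):
            (Path(tmp) / name).mkdir()
        print(class_to_index(tmp))  # {'ants': 0, 'bees': 1}
```

Any label list used at inference time should follow this same order, with index 0 for ants and index 1 for bees.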

Device Selection

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

Model Loading

# Load VGG16 model with ImageNet weights
# (newer torchvision releases deprecate pretrained=True in favor of the weights argument)

model = models.vgg16(pretrained=True)

Classifier Modification

# Modify the classifier: replace the last layer with one output per class

num_ftrs = model.classifier[6].in_features

model.classifier[6] = nn.Linear(num_ftrs, len(class_names))

Device Assignment

model = model.to(device)

Loss and optimizer setup

# Only the classifier parameters are passed to the optimizer

criterion = nn.CrossEntropyLoss()

optimizer = optim.Adam(model.classifier.parameters(), lr=0.001)

Training Loop

num_epochs = 5

for epoch in range(num_epochs):
    print(f"Epoch {epoch+1}/{num_epochs}")
    for phase in ["train", "val"]:
        if phase == "train":
            model.train()
        else:
            model.eval()
        running_loss, correct = 0.0, 0
        for inputs, labels in dataloaders[phase]:
            inputs, labels = inputs.to(device), labels.to(device)
            optimizer.zero_grad()
            with torch.set_grad_enabled(phase == "train"):
                outputs = model(inputs)
                loss = criterion(outputs, labels)
                if phase == "train":
                    loss.backward()
                    optimizer.step()
            running_loss += loss.item() * inputs.size(0)
            correct += (outputs.argmax(1) == labels).sum().item()
        epoch_loss = running_loss / len(image_datasets[phase])
        epoch_acc = correct / len(image_datasets[phase])
        print(f"{phase} Loss: {epoch_loss:.4f}, Acc: {epoch_acc:.4f}")

print("Training complete.")
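The loop multiplies each batch loss by the batch size before summing because CrossEntropyLoss returns the mean over the batch, and the final batch is usually smaller than the rest. The sketch below isolates that bookkeeping in plain Python. The function name and the batch values are made up for illustration.

```python
def epoch_metrics(batches, dataset_size):
    """Aggregate per-batch results the same way the training loop does.

    `batches` is a list of (mean_loss, batch_size, num_correct) tuples;
    the values used below are invented for illustration.
    """
    running_loss = 0.0
    correct = 0
    for mean_loss, batch_size, num_correct in batches:
        running_loss += mean_loss * batch_size  # undo the per-batch mean
        correct += num_correct
    return running_loss / dataset_size, correct / dataset_size


if __name__ == "__main__":
    # Two full batches of 32 and a final partial batch of 6 (70 samples in total).
    batches = [(0.70, 32, 20), (0.50, 32, 25), (0.20, 6, 6)]
    loss, acc = epoch_metrics(batches, dataset_size=70)
    print(f"Loss: {loss:.4f}, Acc: {acc:.4f}")
```

A plain average of the three batch losses would give the small final batch the same weight as the full ones, which is why the loop weights by batch size instead.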

Save the model

model_path = "vgg16_hymenoptera.pth"

torch.save(model.state_dict(), model_path)

print("Model saved.")

Register the model in the current workspace

from azureml.core import Workspace

from azureml.core.model import Model

ws = Workspace.from_config()

Model.register(
    workspace=ws,
    model_path=model_path,
    model_name="vgg16_hymenoptera",
    description="Fine-tuned VGG16 model for hymenoptera classification",
)

print("Model registered in Azure ML.")

Endpoint Creation

Create a file named deploy_model.ipynb.

score.py

This script (score.py) defines a model inference pipeline for deployment on Azure ML. It includes two main functions: init() to load a pre-trained VGG16 model fine-tuned for Hymenoptera classification (ants vs. bees), and run() to process image input and return predictions. The script supports JSON input with image data, preprocesses it, performs inference, and returns the predicted class label.

%%writefile score.py
import json
import torch
import torch.nn as nn
import torchvision.transforms as transforms
from torchvision import models
from PIL import Image
import os
import io

# Define class labels (same order as the sorted training folders)
CLASS_NAMES = ["ant", "bee"]

# Load model at initialization
def init():
    global model
    model_path = os.path.join(os.getenv("AZUREML_MODEL_DIR", ""), "vgg16_hymenoptera.pth")
    print(f"AZUREML_MODEL_DIR: {os.getenv('AZUREML_MODEL_DIR')}")
    print(f"Model path: {model_path}")
    if not os.path.exists(model_path):
        # Fail loudly: returning here would leave `model` undefined for run()
        raise FileNotFoundError(f"Model file not found: {model_path}")
    model = models.vgg16(pretrained=False)
    model.classifier[6] = nn.Linear(4096, 2)  # Adjust for 2 classes
    model.load_state_dict(torch.load(model_path, map_location=torch.device("cpu")))
    model.eval()
    print("Model loaded successfully.")

# Inference function
def run(raw_data):
    try:
        print("Received data for inference.")
        # Parse JSON input
        data = json.loads(raw_data)
        image_bytes = data.get("image")  # Expect a list of byte values (integers 0-255)
        if image_bytes is None:
            return json.dumps({"error": "No image found in request."})
        print("Image data received.")
        # Convert image bytes to PIL Image
        image = Image.open(io.BytesIO(bytearray(image_bytes))).convert("RGB")
        # Preprocess image
        transform = transforms.Compose([
            transforms.Resize((224, 224)),
            transforms.ToTensor(),
            transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),
        ])
        img_tensor = transform(image).unsqueeze(0)  # Add batch dimension
        # Perform inference
        with torch.no_grad():
            outputs = model(img_tensor)
            _, predicted = torch.max(outputs, 1)
        # Return human-readable class label
        predicted_label = CLASS_NAMES[predicted.item()]
        print(f"Predicted label: {predicted_label}")
        return json.dumps({"prediction": predicted_label})
    except Exception as e:
        print(f"Error during inference: {e}")
        return json.dumps({"error": str(e)})

# Run test when executed as a script
if __name__ == "__main__":
    print("Initializing model...")
    init()
    # Test inference with a sample image
    test_image_path = "1.png"  # Replace with an actual image file for testing
    if os.path.exists(test_image_path):
        with open(test_image_path, "rb") as f:
            image_bytes = f.read()
        print("Running inference test...")
        response = run(json.dumps({"image": list(image_bytes)}))
        print("Inference result:", response)
    else:
        print(f"Test image '{test_image_path}' not found. Please add a test image to verify inference.")

- Initialization (init): Loads the VGG16 model from a file (vgg16_hymenoptera.pth) in the Azure ML model directory, adjusts the classifier for two classes, and sets it to evaluation mode.

- Inference (run): Accepts JSON input with image bytes, preprocesses the image (resize, normalize), runs it through the model, and returns the predicted label (“ant” or “bee”) or an error message.

- Testing: Includes a test block to verify functionality with a local image file (1.png) when run as a script.

- Environment: Uses PyTorch, PIL, and standard libraries, designed for Azure ML endpoint deployment.
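The request format that run() expects is worth spelling out: the "image" field is a JSON list of integer byte values, which run() turns back into bytes with bytearray. The sketch below shows that round trip using only the standard library. The base64 variant is included purely for comparison and is an assumption: score.py as written would need a base64 decode step to accept it.

```python
import base64
import json


def encode_as_int_list(image_bytes):
    """Build the request body in the format run() expects."""
    return json.dumps({"image": list(image_bytes)})


def decode_int_list(raw_data):
    """Recover the original bytes the same way run() does."""
    return bytes(bytearray(json.loads(raw_data)["image"]))


def encode_as_base64(image_bytes):
    """Alternative encoding (an assumption, not accepted by score.py as written)."""
    return json.dumps({"image": base64.b64encode(image_bytes).decode("ascii")})


if __name__ == "__main__":
    sample = bytes(range(256)) * 4  # stand-in for real image bytes
    body = encode_as_int_list(sample)
    assert decode_int_list(body) == sample  # lossless round trip
    print("int-list body:", len(body), "characters")
    print("base64 body:  ", len(encode_as_base64(sample)), "characters")
```

The integer list is simple to produce and parse, at the cost of a noticeably larger request body than base64 for the same image.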

Put an image of a bee or an ant in the same folder (1.png in the code above) and run score.py as a script to test it locally.

environment.yml

This environment.yml file defines a Conda environment named pytorch-env for a PyTorch-based Azure ML deployment. It specifies Python 3.8 and key dependencies like PyTorch, TorchVision, and Azure ML libraries, along with additional packages required for model inference, API creation, and deployment.

%%writefile environment.yml
name: pytorch-env
dependencies:
  - python=3.8
  - joblib
  - pip
  - pip:
    - torch==2.1.0
    - torchvision==0.16.0
    - pillow
    - azureml-sdk
    - azure-ai-ml  # Required for SDK v2
    - azureml-defaults  # Required for deployment
    - azureml-inference-server-http  # Required for scoring
    - fastapi  # Required for API
    - uvicorn  # Required for API server

- Base Environment: Uses Python 3.8 as the runtime.

- Core Dependencies: Includes joblib (via Conda) and PyTorch ecosystem packages (torch==2.1.0, torchvision==0.16.0, pillow) via pip for model training/inference and image processing.

- Azure ML Support: Installs azureml-sdk, azure-ai-ml (SDK v2), azureml-defaults, and azureml-inference-server-http for model registration, deployment, and scoring on Azure ML.

- API Requirements: Adds fastapi and uvicorn to enable a lightweight API server for the deployed endpoint.

deploy.py

This deploy.py script deploys a registered VGG16 model (vgg16_hymenoptera) as a web service on Azure Container Instances (ACI) using Azure ML. It loads the workspace, retrieves the model, sets up the environment from environment.yml, configures the inference script (score.py), and deploys the service, then prints the deployment status and endpoint URL.

%%writefile deploy.py

from azureml.core import Workspace, Model, Environment

from azureml.core.webservice import AciWebservice

from azureml.core.model import InferenceConfig

# Load the Azure ML workspace

ws = Workspace.from_config()

# Get registered model

model = Model(ws, name="vgg16_hymenoptera")

# Define environment

env = Environment.from_conda_specification(name="pytorch-env", file_path="environment.yml")

# Define inference config

inference_config = InferenceConfig(entry_script="score.py", environment=env)

# Define deployment config for Azure Container Instance (ACI)

deployment_config = AciWebservice.deploy_configuration(cpu_cores=1, memory_gb=2)

# Deploy model as a web service

service = Model.deploy(
    workspace=ws,
    name="vgg16-serviceee",
    models=[model],
    inference_config=inference_config,
    deployment_config=deployment_config
)

service.wait_for_deployment(show_output=True)

# Print deployment info

print(f"Service State: {service.state}")

print(f"Scoring URI: {service.scoring_uri}")

- Workspace & Model: Connects to an Azure ML workspace and retrieves the registered model “vgg16_hymenoptera”.

- Environment: Uses the pytorch-env Conda environment defined in environment.yml.

- Inference Config: Links the score.py script with the environment for scoring.

- Deployment Config: Specifies ACI with 1 CPU core and 2 GB of memory.

- Service Deployment: Deploys the model as “vgg16-serviceee”, waits for completion, and outputs the service state and scoring URI.
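Once the service is healthy, the scoring URI can be exercised directly before any web application is built. The sketch below prepares such a request with the standard library, assuming the endpoint accepts a POST with the JSON body that score.py parses. The URI shown is a placeholder and the helper names are illustrative.

```python
import json
import urllib.request


def build_scoring_request(scoring_uri, image_bytes):
    """Prepare a POST request carrying the image as a JSON list of byte values."""
    body = json.dumps({"image": list(image_bytes)}).encode("utf-8")
    return urllib.request.Request(
        scoring_uri,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request, timeout=60):
    """Send the request and return the response body as text."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")


if __name__ == "__main__":
    # Placeholder URI: replace it with the Scoring URI printed by deploy.py.
    req = build_scoring_request("http://example.invalid/score", b"\xff\xd8\xff")
    print(req.get_method(), req.full_url, len(req.data), "bytes")
    # print(send(req))  # uncomment once a real endpoint is available
```

Building the request separately from sending it makes it easy to inspect the payload without touching the network.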

Run deploy.py

!python deploy.py

It will take some time to deploy.

After a successful deployment, you will see output like the following.

Running

2025-03-24 07:09:26+00:00 Registering the environment.

2025-03-24 07:09:32+00:00 Building image..

2025-03-24 07:23:32+00:00 Generating deployment configuration..

2025-03-24 07:23:34+00:00 Submitting deployment to compute..

2025-03-24 07:23:40+00:00 Checking the status of deployment vgg16-service..

2025-03-24 07:26:41+00:00 Checking the status of inference endpoint vgg16-service.

Succeeded

ACI service creation operation finished, operation "Succeeded"

Service State: Healthy

Scoring URI: http://26f07f70-646a-40e6-bb4a-8419dcc7efc4.eastus2.azurecontainer.io/score

Flask Web Application

On your local machine, create a folder named FLASK_AZURE with the following files.

app.py

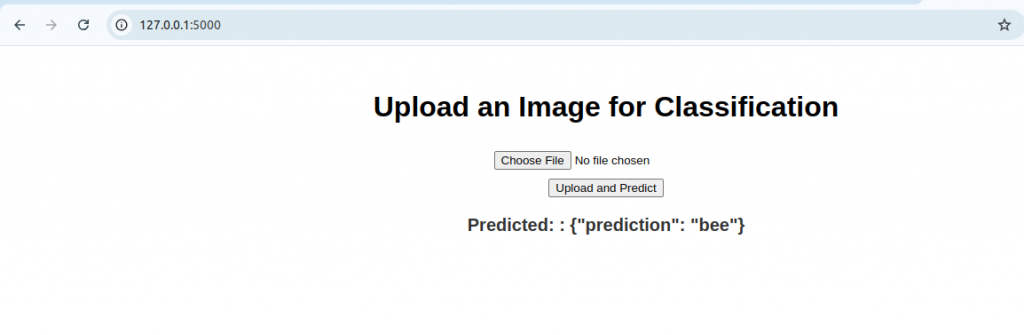

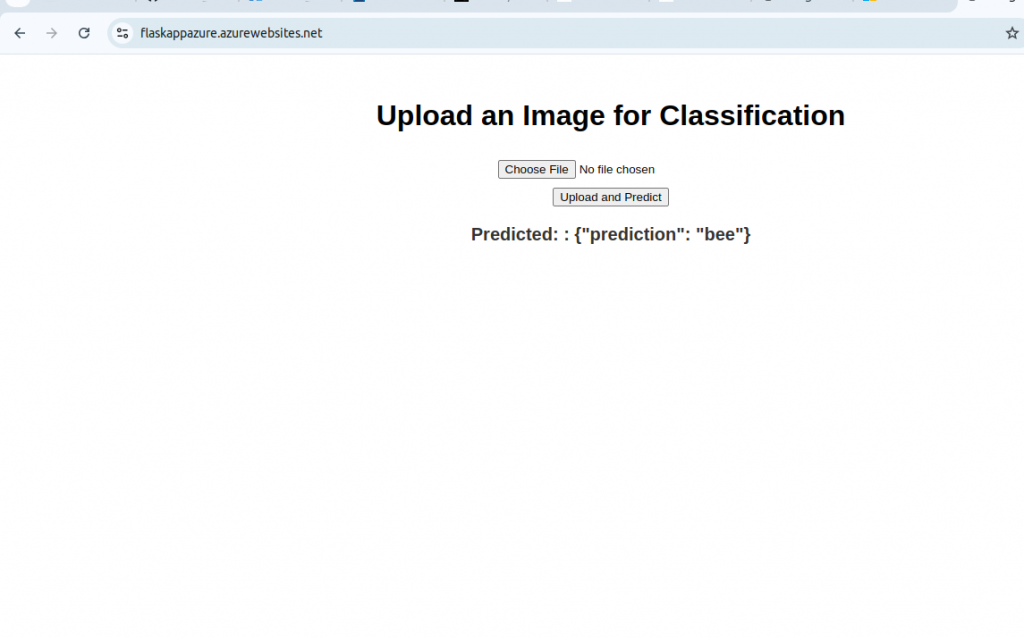

This Flask application creates a web interface to upload images and get predictions from an Azure ML endpoint hosting a VGG16 model. It handles file uploads, converts images to bytes, sends them to the endpoint, and displays the predicted class (e.g., “ant” or “bee”) on an HTML page.

from flask import Flask, request, render_template
import requests
import json
from PIL import Image
import io

app = Flask(__name__)

# Azure ML Endpoint URL
AZURE_ENDPOINT = "http://c6b3572a-9125-41ed-b730-28ffe7be14f6.eastus2.azurecontainer.io/score"  # Replace with your actual endpoint URL

@app.route('/', methods=['GET', 'POST'])
def index():
    if request.method == 'POST':
        if 'file' not in request.files:
            return render_template('index.html', prediction="No file uploaded.")
        file = request.files['file']
        if file.filename == '':
            return render_template('index.html', prediction="No file selected.")
        # Re-encode the upload as JPEG bytes
        image = Image.open(file.stream).convert("RGB")
        img_bytes = io.BytesIO()
        image.save(img_bytes, format='JPEG')
        img_bytes = img_bytes.getvalue()
        # Send image to Azure ML endpoint
        headers = {'Content-Type': 'application/json'}
        payload = json.dumps({"image": list(img_bytes)})
        response = requests.post(AZURE_ENDPOINT, data=payload, headers=headers)
        try:
            response_json = response.json()  # Ensure we parse JSON safely
            if isinstance(response_json, dict):  # Check if it's a dictionary
                prediction = response_json.get("prediction", "Unknown")
            else:
                prediction = f"{response_json}"
        except requests.exceptions.JSONDecodeError:
            prediction = f"Invalid JSON response: {response.text}"
        return render_template('index.html', prediction=f"Predicted: {prediction}")
    return render_template('index.html', prediction=None)

if __name__ == '__main__':
    app.run(debug=True)

- Flask Setup: Defines a Flask app with a single route (/) for both GET and POST requests.

- Image Processing: On POST, it checks for an uploaded file, opens it as a PIL Image, converts it to JPEG bytes, and prepares it as JSON payload.

- Endpoint Call: Sends the image bytes to the Azure ML scoring URI (AZURE_ENDPOINT) via a POST request and retrieves the prediction.

- Response Handling: Parses the JSON response safely, extracts the prediction, and renders it on index.html; handles errors gracefully.

- Run: Launches the app in debug mode for local testing.

- Replace the AZURE_ENDPOINT URL with the actual scoring URI from your deployed Azure ML service (e.g., from deploy.py output).

- Requires an index.html template (shown below) with a file upload form and a placeholder for the prediction result. By default, Flask's render_template looks for it in a templates subfolder next to app.py.
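The response handling can also be written as a small standalone function, which is easier to test than code inside the route. The sketch below assumes one extra case that is worth checking against your own endpoint: because run() returns a string produced by json.dumps, the service may wrap it in a second layer of JSON encoding. The function name extract_prediction is illustrative.

```python
import json


def extract_prediction(body_text):
    """Return a display string for the endpoint's response body.

    Handles a JSON object, a JSON string that itself contains a JSON
    object (possible double encoding), and anything else.
    """
    try:
        parsed = json.loads(body_text)
        if isinstance(parsed, str):  # possibly double-encoded
            parsed = json.loads(parsed)
    except ValueError:
        return f"Invalid JSON response: {body_text}"
    if isinstance(parsed, dict):
        if "error" in parsed:
            return f"Error: {parsed['error']}"
        return f"Predicted: {parsed.get('prediction', 'Unknown')}"
    return f"Unexpected response: {parsed}"


if __name__ == "__main__":
    print(extract_prediction('{"prediction": "bee"}'))
    print(extract_prediction(json.dumps(json.dumps({"prediction": "ant"}))))
    print(extract_prediction("<html>Service unavailable</html>"))
```

In the route, this would be called with response.text in place of the try/except block, under the assumptions stated above.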

index.html

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8">

<meta name="viewport" content="width=device-width, initial-scale=1.0">

<title>Image Classification</title>

<style>

body {

font-family: Arial, sans-serif;

text-align: center;

margin: 50px;

}

form {

margin-top: 20px;

}

input[type="file"] {

margin: 10px 0;

}

.result {

margin-top: 20px;

font-size: 20px;

font-weight: bold;

color: #333;

}

</style>

</head>

<body>

<h1>Upload an Image for Classification</h1>

<form action="/" method="post" enctype="multipart/form-data">

<input type="file" name="file" accept="image/*" required>

<br>

<button type="submit">Upload and Predict</button>

</form>

{% if prediction %}

<div class="result">{{ prediction }}</div>

{% endif %}

</body>

</html>

Now, run the following command.

python app.py

You might need to install a few Python libraries.

The output will look something like:

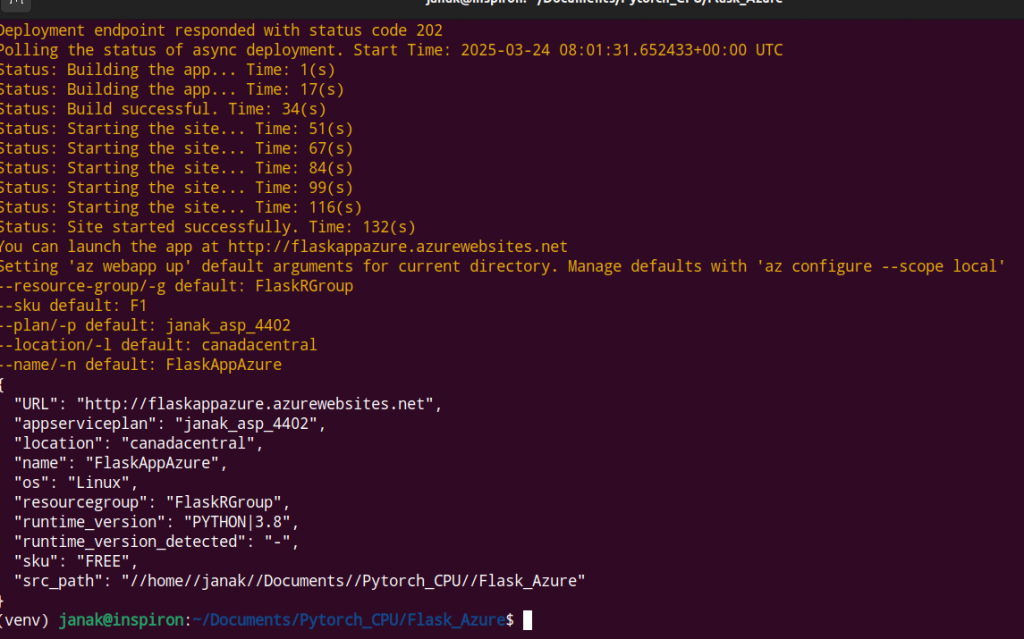

Deploying Flask Application on Azure

Create a requirements.txt in the same folder as app.py.

Flask

gunicorn

requests

pillow

Log in to Azure

az login

Create a resource group

az group create --name FlaskRGroup --location eastus

Create an Azure App Service plan

az appservice plan create --name FlaskPlan --resource-group FlaskRGroup --sku F1

Direct Deployment

az webapp up --name FlaskAppAzure --resource-group FlaskRGroup --runtime "PYTHON:3.8"

Your terminal will look something like

Go to the URL:

You might want to stop the server or delete it completely if it is not in use.

az webapp stop --name FlaskAppAzure --resource-group FlaskRGroup

az webapp start --name FlaskAppAzure --resource-group FlaskRGroup

az webapp delete --name FlaskAppAzure --resource-group FlaskRGroup

Also, remember to delete the endpoint and stop the compute instance when they are not in use.