Mastering MLflow Tracking for SVM Models on the Digits Dataset

Machine learning experimentation involves not only building and tuning models but also meticulously tracking and comparing different runs to identify the best-performing model. MLflow Tracking is a powerful tool that simplifies this process by providing a centralized platform to log parameters, metrics, and artifacts during model development. This introduction focuses on using MLflow Tracking to experiment with a Support Vector Machine (SVM) classifier on the Digits dataset, a classic dataset in machine learning for multi-class classification.

The Digits dataset consists of 8×8 pixel images of handwritten digits (0-9), with each pixel represented as a feature. The goal is to classify these images into their respective digit labels. In this experiment, we use an SVM classifier and explore different hyperparameters, specifically the regularization parameter C and the kernel coefficient gamma. The hyperparameter combinations tested are:

```python
C_values = [0.1, 1, 10]
gamma_values = [0.01, 0.1, 1]
```
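The two lists form a grid of 3 × 3 = 9 hyperparameter combinations, and each combination becomes one MLflow run. A minimal sketch of how `itertools.product` enumerates that grid, using the same values as above:

```python
import itertools

# The same grids used in the experiment
C_values = [0.1, 1, 10]
gamma_values = [0.01, 0.1, 1]

# itertools.product yields every (C, gamma) pair, C varying slowest
combinations = list(itertools.product(C_values, gamma_values))

for C, gamma in combinations:
    print(f"C={C}, gamma={gamma}")

print(f"Total runs: {len(combinations)}")  # Total runs: 9
```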

For each combination of hyperparameters, the following steps are performed:

- Train an SVM model on the Digits dataset.

- Log the hyperparameters, metrics (accuracy and F1 score), and artifacts (confusion matrix) using MLflow Tracking.

- Compare the F1 scores and accuracy across all experiments to determine the best-performing model.

- Save the best model (based on F1 score) for deployment.

The confusion matrix is saved for each experiment to show how the model performs on each individual class. The F1 score, the harmonic mean of precision and recall (here averaged over the ten digit classes and weighted by how many test samples each class has), is the primary metric used to evaluate and select the best model. Once the best model is identified, it is saved and deployed locally for inference.
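To make the "weighted" F1 concrete, here is a small from-scratch sketch on toy labels (illustrative only, not from the Digits experiment). It computes precision, recall and F1 for each class, then averages the per-class F1 values weighted by class support. For these labels, `sklearn.metrics.f1_score(y_true, y_pred, average="weighted")` should return the same value; the helper `weighted_f1` is a hypothetical name for illustration.

```python
from collections import Counter


def weighted_f1(y_true, y_pred):
    """Weighted F1: per-class F1 averaged with weights equal to class support."""
    support = Counter(y_true)  # number of true samples per class
    total = len(y_true)
    score = 0.0
    for label, count in support.items():
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == label and p == label)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != label and p == label)
        fn = count - tp
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        score += f1 * count / total  # weight each class by its share of samples
    return score


# Toy labels: class 2 is never predicted, which drags the F1 score down
y_true = [0, 0, 0, 1, 1, 2]
y_pred = [0, 0, 1, 1, 1, 1]

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
print(f"accuracy    = {accuracy:.4f}")                     # 0.6667
print(f"weighted F1 = {weighted_f1(y_true, y_pred):.4f}")  # 0.6222
```

Accuracy ignores that class 2 is always missed, while the weighted F1 score penalizes it, which is one reason to rank runs by F1.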

This experiment demonstrates how MLflow Tracking can streamline the process of hyperparameter tuning, metric comparison, and model selection, making it an essential tool for machine learning practitioners. By leveraging MLflow, we ensure reproducibility, traceability, and efficiency in our machine learning workflows.

Setup

Create a directory

```bash
mkdir mlflow_Blog && cd mlflow_Blog
```

Create a virtual environment and activate it

```bash
python3 -m venv venv
source venv/bin/activate
```

Install MLflow and the other dependencies

```bash
pip install mlflow
pip install seaborn
```

The Code

Imports

```python
import mlflow
import mlflow.sklearn
import numpy as np
import matplotlib.pyplot as plt
import seaborn as sns
from sklearn import datasets
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC
from sklearn.metrics import accuracy_score, f1_score, confusion_matrix
import itertools
import joblib
```

- MLflow: Tracks experiments, logs parameters, metrics, and saves models.

- NumPy: Handles numerical data and array operations.

- Matplotlib/Seaborn: Visualizes performance metrics (e.g., confusion matrix).

- Scikit-learn: Provides datasets, preprocessing tools, SVM implementation, and evaluation metrics.

- Joblib: Saves the best model for deployment.

These imports collectively enable you to load data, preprocess it, train and evaluate an SVM model, track experiments using MLflow, and save the best model for deployment.
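Before running the experiment, it can help to confirm that these packages are installed in the active virtual environment. A minimal sketch using only the standard library's `importlib.metadata`; the package list is an assumption derived from the imports above (note that the distribution name for `sklearn` is `scikit-learn`), and `installed_versions` is a hypothetical helper name.

```python
from importlib import metadata

# Distribution names assumed from the imports above
REQUIRED = ["mlflow", "scikit-learn", "seaborn", "matplotlib", "numpy", "joblib"]


def installed_versions(packages):
    """Map each distribution name to its installed version, or None if missing."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    for name, version in installed_versions(REQUIRED).items():
        print(f"{name:15} {version or 'NOT INSTALLED'}")
```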

Load the data and prepare for the experiment

```python
# Load the Digits dataset
digits = datasets.load_digits()
X, y = digits.data, digits.target

# Split the data
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Scale the data
scaler = StandardScaler()
X_train = scaler.fit_transform(X_train)
X_test = scaler.transform(X_test)
```

Loads the Digits dataset, which is a collection of 8×8 pixel images of handwritten digits (0-9).

Splits the dataset into training and testing subsets to evaluate the model’s performance on unseen data.

Scales the feature data to have a mean of 0 and a standard deviation of 1. This is important for SVM, as it is sensitive to the scale of the input features.
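Note that the scaler is fitted on the training split only and then reused on the test split, so no test-set statistics leak into training. A minimal NumPy sketch of what `fit_transform` and `transform` compute (an illustration, not a replacement for `StandardScaler`). Some Digits pixels, such as the top-left corner, are 0 in every image; like `StandardScaler`, the sketch leaves such zero-variance columns unscaled instead of dividing by zero. The function name `standardize` is hypothetical.

```python
import numpy as np


def standardize(X_train, X_test):
    """Scale both splits using mean/std computed on the training split only."""
    mean = X_train.mean(axis=0)
    std = X_train.std(axis=0)  # population std (ddof=0), as StandardScaler uses
    std[std == 0] = 1.0        # constant columns: keep scale 1, avoid division by zero
    return (X_train - mean) / std, (X_test - mean) / std


# Toy data: the second column is constant, like an always-blank pixel
X_tr = np.array([[0.0, 5.0], [2.0, 5.0], [4.0, 5.0]])
X_te = np.array([[2.0, 7.0]])
X_tr_scaled, X_te_scaled = standardize(X_tr, X_te)
print(X_te_scaled)  # [[0. 2.]]
```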

```python
# Hyperparameters to test
C_values = [0.1, 1, 10]
gamma_values = [0.01, 0.1, 1]

# Best model so far, plus per-run results for the comparison plots
best_f1 = 0
best_model = None
best_params = {}
accuracies = []
f1_scores = []
configs = []
```

Start the experiment

MLflow allows you to group related runs (e.g., different hyperparameter configurations, models, or datasets) under a single experiment. This makes it easier to compare and analyze results.

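```python
mlflow.set_experiment("Digits_SVM_Tracking")
```

By setting an experiment, you can logically separate different projects or tasks. For example, all runs related to SVM on the Digits dataset can be grouped under the experiment "Digits_SVM_Tracking". If the experiment does not exist yet, `set_experiment` creates it, and subsequent runs are recorded under it.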

```python
for C, gamma in itertools.product(C_values, gamma_values):
```

Iterates over all combinations of C and gamma values using itertools.product.

```python
    with mlflow.start_run():
```

Starts a new MLflow run to track the current hyperparameter combination.

```python
        model = SVC(C=C, gamma=gamma, kernel='rbf', random_state=42)
        model.fit(X_train, y_train)
```

Trains an SVM model with the current hyperparameters.

```python
        y_pred = model.predict(X_test)
        acc = accuracy_score(y_test, y_pred)
        f1 = f1_score(y_test, y_pred, average='weighted')
        cm = confusion_matrix(y_test, y_pred)
```

Evaluates the model on the test set.

```python
        mlflow.log_param("C", C)
        mlflow.log_param("gamma", gamma)
        mlflow.log_metric("accuracy", acc)
        mlflow.log_metric("f1_score", f1)
```

Logs the hyperparameters and evaluation metrics in MLflow.

```python
        plt.figure(figsize=(6, 5))
        sns.heatmap(cm, annot=True, fmt='d', cmap='Blues',
                    xticklabels=digits.target_names, yticklabels=digits.target_names)
        plt.xlabel('Predicted')
        plt.ylabel('Actual')
        plt.title(f'Confusion Matrix (C={C}, gamma={gamma})')
        cm_filename = f"confusion_matrix_C{C}_gamma{gamma}.png"
        plt.savefig(cm_filename)
        plt.close()
        mlflow.log_artifact(cm_filename)
```

Visualizes and logs the confusion matrix as an artifact.
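The heatmap is easiest to read once you know that scikit-learn's `confusion_matrix` puts actual labels on the rows and predicted labels on the columns. Dividing the diagonal by the row sums therefore gives the recall of each digit, which points to the classes a configuration struggles with. A minimal NumPy sketch on a toy 3-class matrix (illustrative values, not from the experiment); `per_class_recall` is a hypothetical helper name.

```python
import numpy as np


def per_class_recall(cm):
    """Recall per class from a confusion matrix whose rows are actual labels."""
    cm = np.asarray(cm, dtype=float)
    actual_counts = cm.sum(axis=1)  # row sums = true samples per class
    correct = np.diag(cm)           # diagonal = correctly predicted samples
    # Classes with no true samples get recall 0 instead of a division by zero
    return np.divide(correct, actual_counts,
                     out=np.zeros_like(actual_counts), where=actual_counts > 0)


# Toy confusion matrix: rows = actual class, columns = predicted class
cm = [[5, 1, 0],
      [0, 4, 0],
      [2, 0, 3]]
recall = per_class_recall(cm)
print(recall.round(3))
print("weakest class:", int(recall.argmin()))  # weakest class: 2
```

Inside the loop, these values could also be logged per class, for example with `mlflow.log_metric(f"recall_digit_{i}", r)`; the metric name here is a hypothetical choice.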

```python
        if f1 > best_f1:
            best_f1 = f1
            best_model = model
            best_params = {"C": C, "gamma": gamma}
```

Keeps track of the best model based on the F1 score.

```python
        accuracies.append(acc)
        f1_scores.append(f1)
        configs.append(f"C={C}, gamma={gamma}")
```

Stores the results of each run for later comparison.
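Because the loop stores every configuration with its scores, the full ranking (not just the winner) can be recovered afterwards. A minimal sketch with placeholder values (for illustration only, not results from this experiment); `rank_runs` is a hypothetical helper name. Python's `sorted` stays stable with `reverse=True`, so ties keep loop order, which matches the strict `f1 > best_f1` check that keeps the first configuration reaching the top score.

```python
def rank_runs(configs, accuracies, f1_scores):
    """Return (config, f1, accuracy) tuples sorted by F1 score, best first."""
    rows = zip(configs, f1_scores, accuracies)
    # Stable sort: configurations with equal F1 keep their original loop order
    return sorted(rows, key=lambda row: row[1], reverse=True)


# Placeholder values for illustration only
toy_configs = ["config_a", "config_b", "config_c"]
toy_accuracies = [0.50, 0.70, 0.60]
toy_f1_scores = [0.40, 0.70, 0.70]

ranked = rank_runs(toy_configs, toy_accuracies, toy_f1_scores)
for config, f1, acc in ranked:
    print(f"{config:10} f1={f1:.2f} accuracy={acc:.2f}")
```

In the script itself, the call would be `rank_runs(configs, accuracies, f1_scores)` after the loop finishes.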

The complete loop

```python
for C, gamma in itertools.product(C_values, gamma_values):
    with mlflow.start_run():
        model = SVC(C=C, gamma=gamma, kernel='rbf', random_state=42)
        model.fit(X_train, y_train)

        y_pred = model.predict(X_test)
        acc = accuracy_score(y_test, y_pred)
        f1 = f1_score(y_test, y_pred, average='weighted')
        cm = confusion_matrix(y_test, y_pred)

        # Log parameters and metrics
        mlflow.log_param("C", C)
        mlflow.log_param("gamma", gamma)
        mlflow.log_metric("accuracy", acc)
        mlflow.log_metric("f1_score", f1)

        # Save confusion matrix plot
        plt.figure(figsize=(6, 5))
        sns.heatmap(cm, annot=True, fmt='d', cmap='Blues',
                    xticklabels=digits.target_names, yticklabels=digits.target_names)
        plt.xlabel('Predicted')
        plt.ylabel('Actual')
        plt.title(f'Confusion Matrix (C={C}, gamma={gamma})')
        cm_filename = f"confusion_matrix_C{C}_gamma{gamma}.png"
        plt.savefig(cm_filename)
        plt.close()
        mlflow.log_artifact(cm_filename)

        # Track the best model
        if f1 > best_f1:
            best_f1 = f1
            best_model = model
            best_params = {"C": C, "gamma": gamma}

        accuracies.append(acc)
        f1_scores.append(f1)
        configs.append(f"C={C}, gamma={gamma}")
```

Save the best model

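```python
# Save the best model.
# No `with mlflow.start_run()` block is open here, so MLflow starts a run
# automatically when something is logged; that run stays active for the
# comparison plots below.
if best_model is not None:
    model_filename = "best_svm_model_for_blog.pkl"
    joblib.dump(best_model, model_filename)
    mlflow.sklearn.log_model(best_model, "best_model")
    mlflow.log_artifact(model_filename)
    mlflow.log_params(best_params)
```

This block of code saves the best-performing SVM model (identified during hyperparameter tuning) and logs it along with its parameters and artifacts in MLflow.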

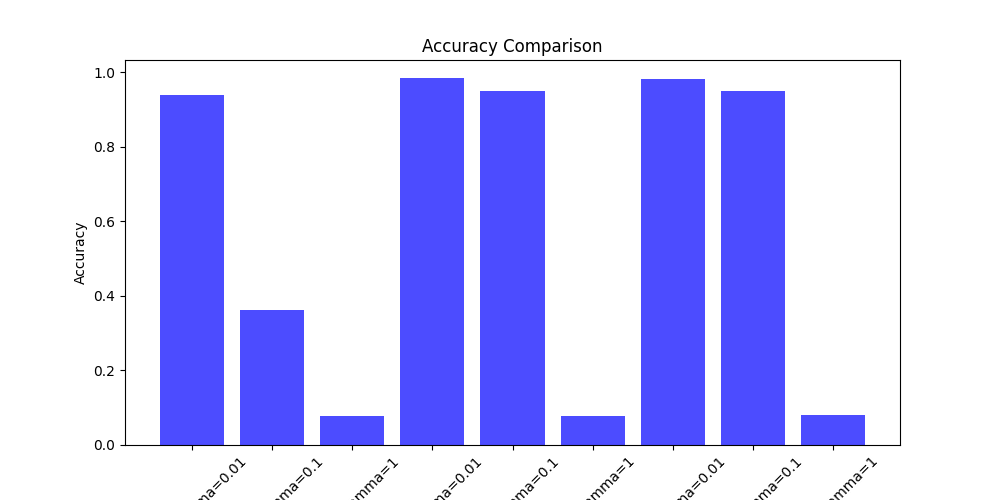
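One caveat: the logged `best_model` contains only the SVC. The `StandardScaler` was fitted separately and is not logged, so anyone calling the model must reproduce the scaling themselves. A minimal sketch of an alternative, assuming you are willing to change the training code: wrap the scaler and classifier in a scikit-learn `Pipeline` so the fitted scaling statistics are stored with the model. The values `C=10, gamma=0.01` are placeholders, not necessarily the best configuration found above.

```python
from sklearn import datasets
from sklearn.metrics import f1_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

digits = datasets.load_digits()
X_train, X_test, y_train, y_test = train_test_split(
    digits.data, digits.target, test_size=0.2, random_state=42
)

# Scaler and classifier are fitted together and saved together.
# C and gamma are placeholder values for illustration.
pipeline = make_pipeline(StandardScaler(), SVC(C=10, gamma=0.01, kernel="rbf"))
pipeline.fit(X_train, y_train)     # raw, unscaled pixel values

y_pred = pipeline.predict(X_test)  # scaling is applied inside the pipeline
print("Weighted F1:", f1_score(y_test, y_pred, average="weighted"))

# Inside an active MLflow run, the whole pipeline could be logged with:
# mlflow.sklearn.log_model(pipeline, "best_model")
```

With this design, a client of the served model would send raw pixel values instead of refitting a scaler on the training split.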

Comparison Plots

```python
# Plot accuracy comparison
plt.figure(figsize=(10, 5))
plt.bar(configs, accuracies, color='blue', alpha=0.7)
plt.xlabel("Hyperparameter Configs")
plt.ylabel("Accuracy")
plt.title("Accuracy Comparison")
plt.xticks(rotation=45)
plt.savefig("accuracy_comparison.png")
mlflow.log_artifact("accuracy_comparison.png")
plt.close()
```

This block of code creates a bar plot to compare the accuracy scores of different hyperparameter configurations (C and gamma) tested during the experiment. The plot is saved as an image file and logged as an artifact in MLflow.

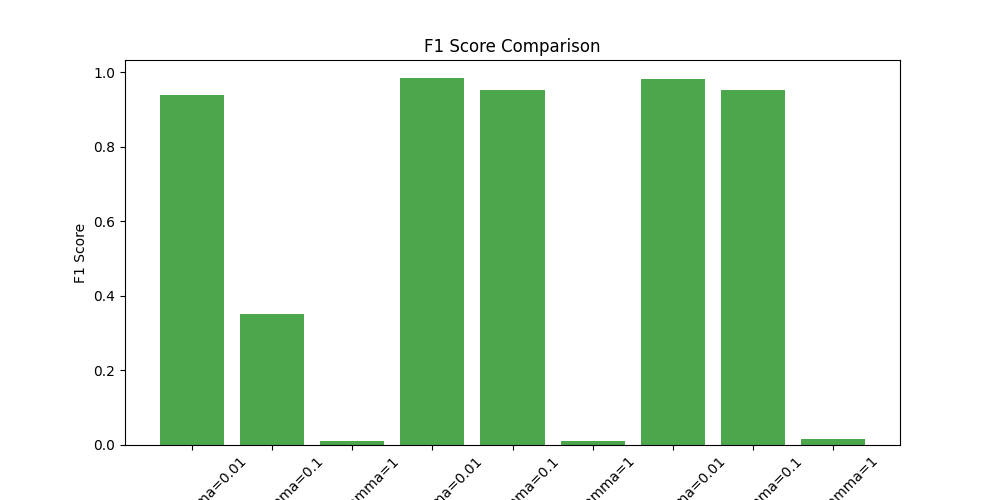

```python
# Plot F1-score comparison
plt.figure(figsize=(10, 5))
plt.bar(configs, f1_scores, color='green', alpha=0.7)
plt.xlabel("Hyperparameter Configs")
plt.ylabel("F1 Score")
plt.title("F1 Score Comparison")
plt.xticks(rotation=45)
plt.savefig("f1_score_comparison.png")
mlflow.log_artifact("f1_score_comparison.png")
plt.close()

print(f"Best model parameters: {best_params}")
```

This block of code creates a bar plot to compare the F1 scores of different hyperparameter configurations (C and gamma) tested during the experiment. The plot is saved as an image file and logged as an artifact in MLflow. Additionally, it prints the best hyperparameters found during the experiment.
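The two plotting blocks differ only in the metric, label, file name and color, so they can be folded into one helper. A minimal sketch; `plot_comparison` is a hypothetical name, and the `Agg` backend is an assumption that suits scripts run without a display. It also calls `tight_layout()` so the rotated tick labels are not cut off in the saved image.

```python
import matplotlib

matplotlib.use("Agg")  # render straight to files, no display needed
import matplotlib.pyplot as plt


def plot_comparison(configs, values, ylabel, filename, color):
    """Save a bar chart of one metric across hyperparameter configs; return the path."""
    fig, ax = plt.subplots(figsize=(10, 5))
    ax.bar(configs, values, color=color, alpha=0.7)
    ax.set_xlabel("Hyperparameter Configs")
    ax.set_ylabel(ylabel)
    ax.set_title(f"{ylabel} Comparison")
    ax.tick_params(axis="x", labelrotation=45)
    fig.tight_layout()  # keep rotated labels inside the image
    fig.savefig(filename)
    plt.close(fig)
    return filename
```

In the script, each plot then becomes one line, for example `mlflow.log_artifact(plot_comparison(configs, accuracies, "Accuracy", "accuracy_comparison.png", "blue"))` and `mlflow.log_artifact(plot_comparison(configs, f1_scores, "F1 Score", "f1_score_comparison.png", "green"))`.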

Running the Code

Save the code in a .py script or a Jupyter notebook (.ipynb) and run it.

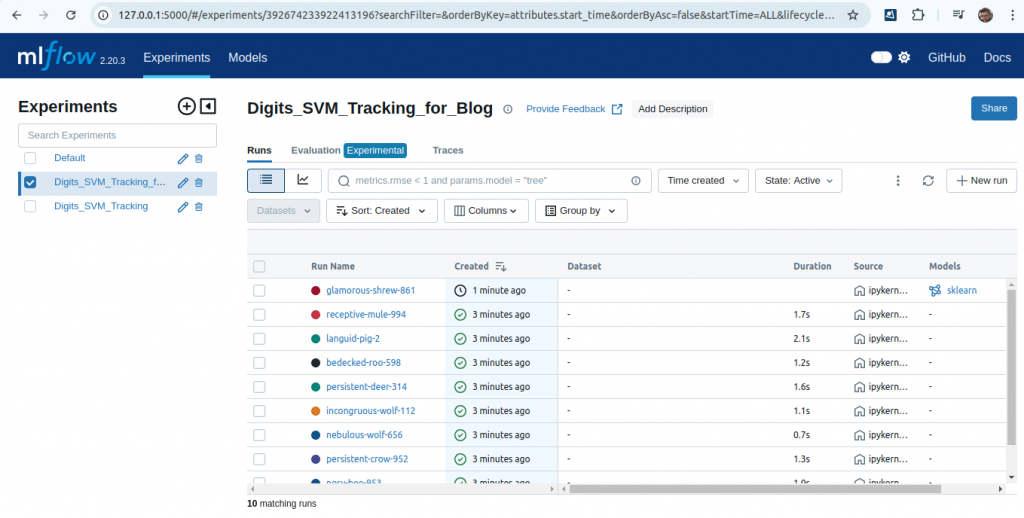

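The MLflow UI

```bash
mlflow ui
```

Run this from the directory where you executed the script, so the UI finds the locally stored runs, then visit http://127.0.0.1:5000/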

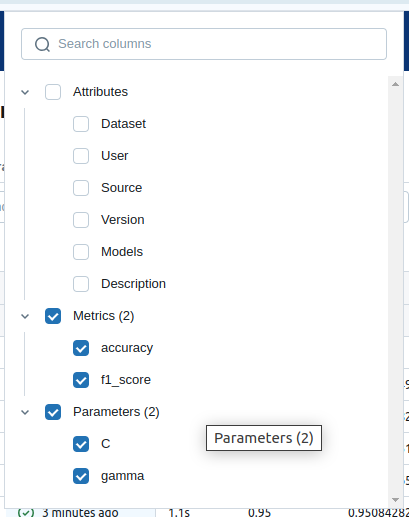

Choose the following columns

The runs now appear as a table that you can download, or sort and group directly in the MLflow UI.

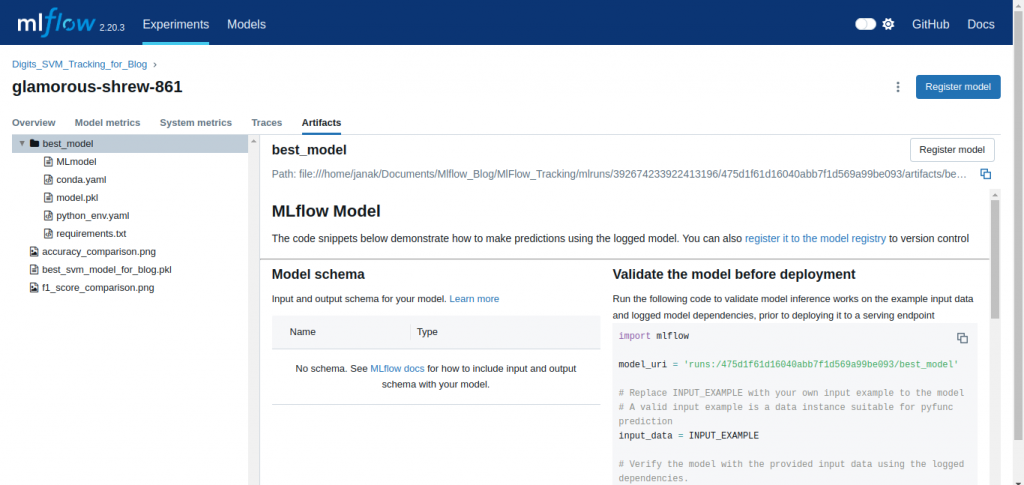

Our Best Model

The run created after the loop holds the best model together with the comparison plots for all the experiments.

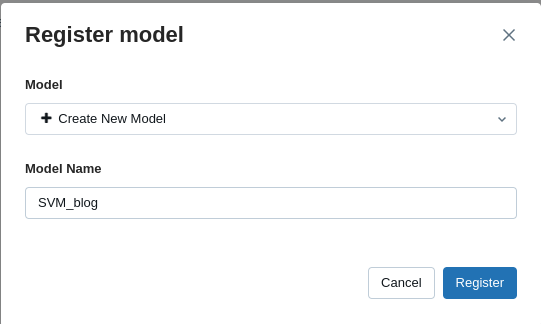

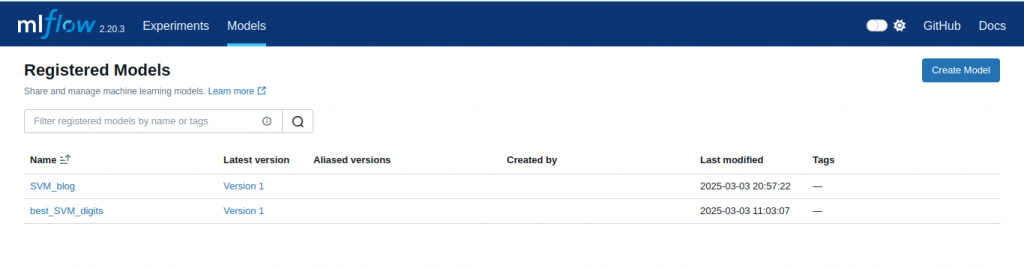

Register the Model

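Open the run that contains the best model, select the best_model artifact, and click Register Model. The serve command below assumes the model is registered under the name SVM_blog (version 1).

Then click Models and select the registered model to see its details.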

Deploy the model locally

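```bash
mlflow models serve -m models:/SVM_blog/1 -p 1234 --no-conda
```

This command serves version 1 of the registered model SVM_blog as a local REST API on port 1234. The --no-conda flag tells MLflow to use the current Python environment instead of creating a Conda environment; if your MLflow version rejects this flag, use --env-manager local instead.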

Test it

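http://localhost:1234/invocations

Opening this URL in a browser sends a GET request. Because the endpoint only accepts POST requests, a running server answers with HTTP 405 (Method Not Allowed); getting that error confirms the model is being served on port 1234.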
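The MLflow scoring server also exposes a `/ping` health-check endpoint that answers GET requests, which makes a scripted check more direct than reading a 405 error. A minimal sketch using only the standard library; `server_is_up` is a hypothetical helper name, and the base URL assumes the port used above.

```python
import urllib.request


def server_is_up(base_url="http://localhost:1234", timeout=2.0):
    """Return True if the MLflow scoring server answers GET /ping with HTTP 200."""
    try:
        with urllib.request.urlopen(f"{base_url}/ping", timeout=timeout) as response:
            return response.status == 200
    except OSError:  # URLError, HTTPError and timeouts are all OSError subclasses
        return False


if __name__ == "__main__":
    print("Model server running:", server_is_up())
```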

Python code to call the API

The following code provides a complete workflow for loading, preprocessing, visualizing, and preparing data for inference with the deployed MLflow model. It is a practical example of how to interact with a machine learning model served as a REST API.

```python
import matplotlib.pyplot as plt
import requests
from sklearn import datasets
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

# Load the Digits dataset
digits = datasets.load_digits()
X, y = digits.data, digits.target

# Recreate the training split (same test_size and random_state)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Refit the scaler on the training split: the served model expects scaled
# features, but the scaler itself was not logged with the model
scaler = StandardScaler()
X_train = scaler.fit_transform(X_train)

sample_index = 5

# Get the corresponding image (before scaling) and plot it
original_image = X_test[sample_index].reshape(8, 8)
plt.figure(figsize=(3, 3))
plt.imshow(original_image, cmap='gray')
plt.colorbar()
plt.title(f"Sample Digit from X_test (Index {sample_index})")
plt.show()

# Scale the test data exactly as during training
X_test = scaler.transform(X_test)
sample = X_test[sample_index].tolist()  # JSON-serializable list of 64 floats

# Prepare the input
data = {
    "instances": [sample]  # one row per sample
}
```

The following code sends the sample to the deployed model's REST API, retrieves the prediction, and handles potential errors. This is the same pattern you would use to test the model and integrate it into other applications.

```python
# Send the request
url = "http://localhost:1234/invocations"
headers = {"Content-Type": "application/json"}
response = requests.post(url, json=data, headers=headers)

# Print the prediction
if response.status_code == 200:
    print("Prediction:", response.json())
else:
    print("Error:", response.status_code, response.text)
```

Output

```
Prediction: {'predictions': [1]}
```

Conclusion

Using MLflow Tracking, we successfully:

- Organized and tracked multiple experiments.

- Identified the best hyperparameters for the SVM model.

- Saved and logged the best model for reproducibility.

- Deployed the model as a REST API for real-time inference.

MLflow simplifies the machine learning lifecycle by providing tools for experiment tracking, model management, and deployment. Whether you’re working on a small project or a large-scale system, MLflow can help you stay organized and efficient.

Next Steps

- Integrate MLflow with cloud platforms like AWS, Azure, or GCP for scalable deployments.

- Experiment with other datasets and algorithms to further enhance your ML workflow.

By following this guide, you can leverage MLflow to streamline your machine learning projects and focus on building better models. Happy tracking!